Dissertation (what I thought 10 years ago)

This is my undergraduate dissertation. I wrote it over 10 years ago so a lot of things have changed. I think the thing that has changed the most is me! I cringe a bit at the wildly overconfident statements I make, and the referencing is terrible. As tempting as it is to correct it, the person who wrote it doesn’t exist any more so I’ve left it as is. There might also be some errors in formatting that have come in from the InDesign export process, mostly small caps becoming lower case.

If you want the original PDF then there’s a screen-res copy here (8 MB) and a full-res copy here (73 MB). The HTML version follows, but it’s a pretty heavy page so don’t open it on the bus!

This got pretty badly mangled by a script that was supposed to convert my endnotes from WordPress to Jekyll. It’s still healing.

- Frank Gehry

- NOX

- The Emergence and Design Group

- The Smart Geometry Group

- Marcos Novak

- Asymptote

- Greg Lynn

- Conclusion

- Glossary

- Notes TODO

This text will explore the use of computers in the design process, their move up the hierarchy of design tools, and what their new position higher up that hierarchy is making possible in terms of exotic form finding. Rather than producing purely sculptural forms, new computing technologies, when wielded by today’s architects, allow structural considerations to work symbiotically with form to produce true elegance in architecture.

The exponentially increasing processing speed of computers1, and the resultant advances in software and modelling techniques, are allowing situations to be modelled with increasing complexity. They also give designers more freedom in what they choose to represent, with fewer of the constraints imposed by primitive computer technology. It is therefore important to understand how this new technology is affecting architectural design today, because greater comprehension allows more imaginative use of it.

Technology has always informed architectural design, and large technological advances have inevitably brought about changes in architectural styles. Traditionally, from prehistory through to the classical and medieval periods, architecture was one of the major driving forces behind technology because it was a significant part of the war machinery of any state. Fortified towns, castles and other military buildings were as much a part of an arms race2 as trebuchets and cannons. As battles became more high-tech, and the advent of gunpowder made traditional stone fortifications obsolete, other parts of society quickly took over as the driving force behind technological advances. Since then, transport (by land, water and air) and later information (its collection and dissemination) have been the main impetus behind technological advancement, with other players such as agriculture, industry and medicine also contributing, while architecture fell further and further from the cutting edge. Over the last few centuries technologies from other disciplines have cross-pollinated into architecture, and the prevailing paradigm has changed from one where architecture is a technological innovator to one where architecture is technologically bankrupt, scavenging innovations that have fallen away from the cutting edge of other industries and trying to integrate them, usually inappropriately, into a ‘style’.

During the last few decades, computers have come to the fore in every field and are used in virtually every aspect of modern life, including architecture. However, computing is not just the digital work performed by computers: it is a general term for calculation, which can include the complicated analogue calculations found in nature. Calculation is a process that follows a set of rules, but this rule set can be anything from simple arithmetic to the complex non-standard systems found in nature. The arrangement of sand ridges left by a receding tide on a beach is as much a product of computation as a CAD rendering or the output of a spreadsheet.

Analogue calculations have long been used by designers wishing to simulate real-world phenomena, for example the catenary3 curves in the hanging force models Gaudí used to compute the forces in the complex arches and columns of the Sagrada Família. However, it has taken time to bring about a unification between the number-crunching abilities of a digital computer and the analogue calculations already involved in architectural design. Early computing machines were developed at Bletchley Park, where Alan Turing4 worked on cracking the complex Enigma code, and early applications were all in the fields of mathematics or engineering because of their huge number-crunching abilities in comparison to human mathematicians. Similarly, the computer as applied to architectural design was to begin with simply a replacement for, or augmentation of, the draughting already undertaken by hand on paper. The computer produced a collection of virtual two-dimensional lines on a virtual two-dimensional plane, with the express purpose of plotting onto paper using a computer-controlled mechanical arm with a traditional pen attached to it. It was a device designed specifically to mimic human action, even down to the details of the printing process.
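The hanging-chain principle behind Gaudí's force models can be sketched numerically. A chain hanging under its own weight takes the shape of a catenary, y = a·cosh(x/a). Turned upside down, that shape gives an arch that works purely in compression. The sketch below is illustrative only. It assumes both supports are at the same height, finds the parameter a for a given span and sag by bisection, and then samples the inverted arch. The function names are hypothetical.

```python
import math


def catenary_parameter(span: float, sag: float, tol: float = 1e-12) -> float:
    """Return a such that the chain y = a*cosh(x/a), hung between two supports
    at the same height `span` apart, dips `sag` below them."""
    half = span / 2.0

    def excess(u: float) -> float:
        # With u = half / a, the sag is half * (cosh(u) - 1) / u, which grows with u.
        return half * (math.cosh(u) - 1.0) / u - sag

    lo, hi = 1e-9, 1.0
    while excess(hi) < 0.0:  # widen the bracket until it contains the root
        hi *= 2.0
    while hi - lo > tol:     # plain bisection on u
        mid = 0.5 * (lo + hi)
        if excess(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return half / (0.5 * (lo + hi))


def inverted_catenary_arch(span: float, rise: float, n: int = 11) -> list[tuple[float, float]]:
    """Sample n points of the hanging chain flipped upside down into an arch."""
    a = catenary_parameter(span, rise)
    half = span / 2.0
    points = []
    for i in range(n):
        x = -half + span * i / (n - 1)
        y = rise - a * (math.cosh(x / a) - 1.0)  # height above the springing line
        points.append((x, y))
    return points


if __name__ == "__main__":
    for x, y in inverted_catenary_arch(span=10.0, rise=4.0):
        print(f"x = {x:6.2f} m   y = {y:6.3f} m")
```

A physical hanging model does this "calculation" by itself. The code only reproduces the single-chain case, whereas Gaudí's models combined many chains and weights.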

Recently, in a series of evolutionary steps, the digital computing process has gained considerable complexity through integration with technologies from other disciplines (engineering analysis, cinema special effects, physics simulation). The original two-dimensional virtual space became three-dimensional virtual space, and has now become n-dimensional virtual space. Crude two-dimensional plotting has become photo-quality printing, and has even migrated into three dimensions with rapid prototyping and CNC machining. Moreover, although these technologies have only recently become commercially viable, they have been researched extensively for some time, so considerable advances are already waiting on the sidelines to be integrated into real design projects.

The area in which the biggest advances have been made is in understanding how design, as opposed to purely mechanised draughting, can be enabled through software. For example, techniques and software from the cinema special effects industry have been used to generate forms with animated morphing, and virtual clay sculpting, borrowed from the automotive styling industry, has allowed styling and physics to be considered simultaneously. Complex physical models were used by Frei Otto and Ted Happold as analogue computers to develop minimal structures. By exploiting the material properties of the model, the complex analysis was largely done already, and subsequent computer analysis of the resultant structures has shown this to be a very effective technique.

In particular, iterative design techniques based on the analysis of naturally occurring emergent systems have begun to be used at the very forefront of architectural design. The elegance derived from emergent systems is both beautiful and efficient. Modelling them in digital space, however, requires both immense processing power and an intricate knowledge of the rule set governing the system being modelled. This use is closer to the engineering and mathematical applications of the early computers than to digital draughting. The modelling of very complex systems highlights the main difference between digital and analogue computing. Anomalous results from an analogue computer are generally due to a rogue variable left unconsidered because of too much complexity. Examples include neglecting the effect of Reynolds number when miniaturising airflow tests, or forgetting that the sun will warm one side of the model more than the other when testing a structure. The opposite is true in digital computers, where a lack of complexity is usually responsible for misleading results (e.g. neglecting to include an algorithm that accounts for joint friction in loading situations).
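The Reynolds number example can be made concrete. Re = ρvL/μ compares inertial forces to viscous forces in a flow. A 1:100 model tested in the same air at the same wind speed therefore has a Reynolds number 100 times smaller, and the flow around it can behave differently from the flow around the real building. In this sketch the air properties are typical values for about 20 °C, and the wind speed and building height are assumed figures for illustration only.

```python
def reynolds_number(density: float, velocity: float, length: float, viscosity: float) -> float:
    """Re = rho * v * L / mu: ratio of inertial to viscous forces (dimensionless)."""
    return density * velocity * length / viscosity


# Approximate properties of air at about 20 C (assumed values for illustration).
AIR_DENSITY = 1.204      # kg/m^3
AIR_VISCOSITY = 1.81e-5  # Pa*s

WIND = 10.0              # m/s, hypothetical wind speed
BUILDING = 50.0          # m, hypothetical building height
MODEL = BUILDING / 100   # m, the same building as a 1:100 model

full_re = reynolds_number(AIR_DENSITY, WIND, BUILDING, AIR_VISCOSITY)
model_re = reynolds_number(AIR_DENSITY, WIND, MODEL, AIR_VISCOSITY)

# Wind speed the model would need, in the same air, to match the full-scale Re.
matching_speed = full_re * AIR_VISCOSITY / (AIR_DENSITY * MODEL)

print(f"full scale Re = {full_re:.3g}")
print(f"1:100 model Re = {model_re:.3g} ({full_re / model_re:.0f} times smaller)")
print(f"speed needed to match: {matching_speed:.0f} m/s")
```

To match the full-scale flow, the model would need a wind of about 1000 m/s. That is far beyond what a model test can provide, which is why this kind of scale effect is easy to overlook in an analogue experiment.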

It is possible to design an algorithm that will do just about anything, for instance designing a form based on the flow of people through a space, but more commonly designers will modify a commercially available program with advanced modelling algorithms already built in. Cloth simulators, borrowed from cinema special effects, are one way of developing forms, as UNStudio did at Anaheim Central Station. The results of these algorithms are interpreted as three-dimensional models in programs like 3D Studio Max or Maya. Form-finding exercises are limited only by the controller’s imagination, talent and ingenuity.

The design process now has an element of chaos introduced into it. A designer can design a machine or system for generating a result and then, if the system is digitised and reduced to an algorithm, allow it to run hundreds or thousands of times until an acceptable design is produced. In other words, results with desirable characteristics (to use Darwinian terms, greater ‘fitness’) can be selected and then interbred to produce more desirable ‘offspring’. This generates an iterative process whose results tend towards an optimal design.
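The select-and-interbreed loop described above is essentially a genetic algorithm. Below is a minimal, hypothetical sketch. Each "design" is just a list of numbers, and the stand-in `fitness` function simply rewards designs close to an arbitrary `TARGET`. In a real project, that function would be replaced by whatever analysis scores a design, such as structure, daylight or circulation. The best few designs are carried over unchanged each generation (elitism), so the best score can never get worse.

```python
import random

# Hypothetical "ideal" parameter values; a real project would score designs
# with structural, environmental or circulation analysis instead.
TARGET = [3.0, -1.0, 2.5, 0.0]


def fitness(design: list[float]) -> float:
    """Higher is better: negative squared distance from TARGET."""
    return -sum((g - t) ** 2 for g, t in zip(design, TARGET))


def crossover(a: list[float], b: list[float], rng: random.Random) -> list[float]:
    """'Interbreed' two parents: each gene is taken from one parent at random."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def mutate(design: list[float], rng: random.Random, rate: float = 0.2, scale: float = 0.5) -> list[float]:
    """Occasionally nudge a gene so the population keeps exploring."""
    return [g + rng.gauss(0.0, scale) if rng.random() < rate else g for g in design]


def evolve(generations: int = 60, pop_size: int = 30, elite: int = 4, seed: int = 1):
    rng = random.Random(seed)
    population = [[rng.uniform(-5.0, 5.0) for _ in TARGET] for _ in range(pop_size)]
    best_history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)      # fittest first
        best_history.append(fitness(population[0]))
        parents = population[: pop_size // 2]           # selection: keep the fitter half
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - elite)
        ]
        population = population[:elite] + children      # elitism: best designs survive unchanged
    population.sort(key=fitness, reverse=True)
    best_history.append(fitness(population[0]))
    return population[0], best_history


if __name__ == "__main__":
    best, history = evolve()
    print("best design:", [round(g, 2) for g in best])
    print("best fitness every 10 generations:", [round(f, 3) for f in history[::10]])
```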

Parameters in a real system are all connected. Even the very basic example of a simply supported beam, when examined, relies on a large set of variables: length, breadth, depth, cross-sectional shape, material, manufacturing technique, surface finish, ambient temperature, and a host of others. These parameters all affect the beam’s load-bearing capacity, and a change to any of them will alter the extent to which the others affect its performance. This is where parametric design becomes very interesting. J Parrish from Arup Sport is planning to write a parametric design algorithm that will allow him to design an entire sports stadium in a day, with every consideration from servicing to sight lines covered. The interesting thing is that the program won’t just design a stadium, but the ideal stadium given all the input considerations.
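A minimal parametric sketch of the beam example follows. It models only a few of the parameters listed above and assumes a rectangular section, a uniformly distributed load and simple elastic bending theory. Under those assumptions:

- the maximum bending moment is wL²/8;
- the elastic section modulus of a b × d rectangle is bd²/6;
- the peak bending stress is the moment divided by the section modulus.

Changing any single input immediately changes the result. The `Beam` class is hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Beam:
    """A simply supported rectangular beam under a uniformly distributed load."""
    span: float     # L, metres
    breadth: float  # b, metres
    depth: float    # d, metres
    load: float     # w, newtons per metre along the span

    def max_moment(self) -> float:
        # Simply supported beam with a UDL: M_max = w * L^2 / 8, at midspan.
        return self.load * self.span ** 2 / 8.0

    def section_modulus(self) -> float:
        # Elastic section modulus of a b x d rectangle: S = b * d^2 / 6.
        return self.breadth * self.depth ** 2 / 6.0

    def max_stress(self) -> float:
        # Peak bending stress sigma = M / S, in pascals.
        return self.max_moment() / self.section_modulus()


if __name__ == "__main__":
    base = Beam(span=6.0, breadth=0.1, depth=0.3, load=5000.0)
    for label, beam in [("base", base),
                        ("double depth", replace(base, depth=0.6)),
                        ("double span", replace(base, span=12.0))]:
        print(f"{label:>12}: {beam.max_stress() / 1e6:.1f} MPa")
```

Doubling the depth cuts the peak stress to a quarter, while doubling the span quadruples it. Even this toy model shows that no parameter can be tuned in isolation.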

The question at this point in architectural history is one of uptake. Will the design community at large grasp the opportunity to make designs fitted exactly to the site, user and program, or will it be transfixed by the cheerful shapes and fail to look beyond them?

There are a number of architects who have made significant contributions to architectural design in this field. This text seeks to explore advances in the field through a study of several prominent practitioners and the ways in which they have employed computer technology in the inception of their designs.

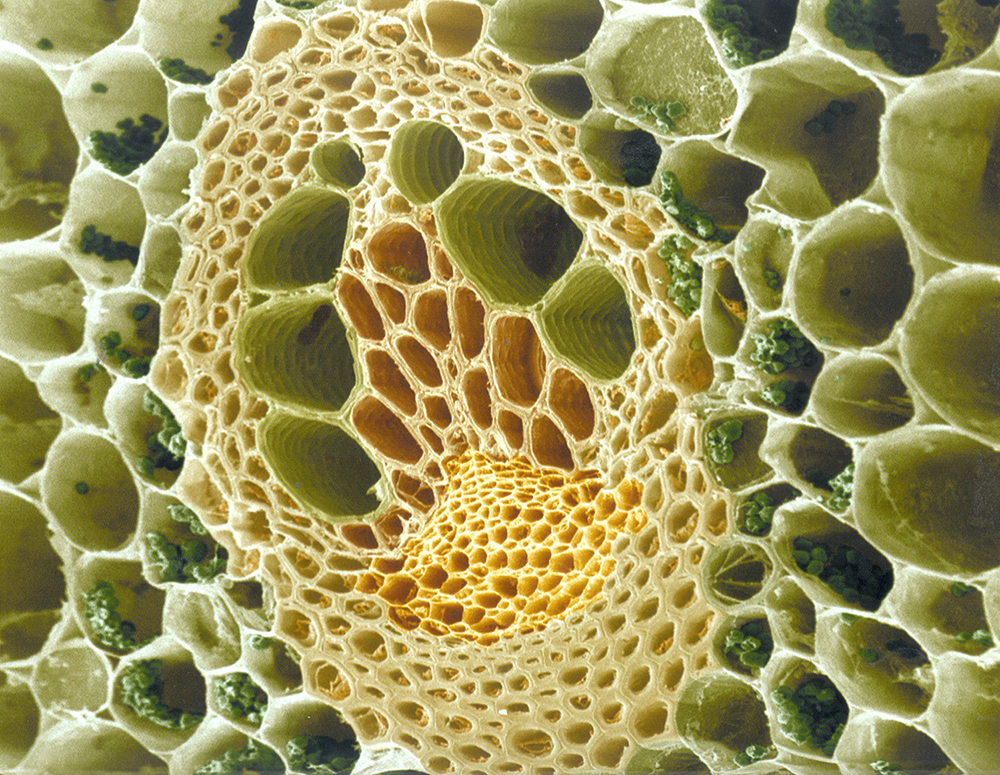

Section Through a Buttercup Stem

Missing sand

Sand Ridges After A Receding Tide

Frank Gehry

Frank Gehry was the first architect to utilise the processing power of computers in the production of built architecture. The resultant constructions have inspired the recent generation of architects to look beyond the familiar rectilinear and experiment with unconventional forms.

The iconic building is the Gehry trademark. Buildings like the Walt Disney Concert Hall in Los Angeles, the Bilbao Guggenheim museum and the bridge Gehry has contributed to Millennium Park in Chicago are all icons in their own right: city accessories, and extensions of the Gehry brand. The latest handbag is an indicator of the wearer’s wealth, good taste and willingness to invest in the best for themselves, and a Gehry building gives the same kudos to a city. Many cities are therefore commissioning their own icons to boost their image and media exposure, and with them tourism and the economy. Several large cities have hoped to boost their economies using the so-called ‘Bilbao effect’ to promote archi-tourism and to give their city a presence in the world’s media. For instance, Toronto has directly attributed a 2.3% rise in tourism to Will Alsop’s new Sharp Centre for Design5. The Gehry brand uses large curving shapes and shiny metal skins to create buildings more akin to giant sculptures or pseudo-futuristic space stations than conventional buildings. The futuristic forms imply a futuristic design method. However, for the most part computer technologies actually play only a small part in the design of these buildings. They are only really brought into play once the designers have built a physical model they are happy with. As such the computer becomes more of a realisation tool than a design tool: it has little or no input into the design of the form6.

The current signature Gehry style had its inception with the fish sculpture on the Barcelona seafront, commissioned for the 1992 Olympics. The design of the fish sculpture began with the design and construction of a physical model using the traditional architectural technique of paper modelling: a simple sheet of paper was bent and pushed until a desirable form was acquired. No computers were used at this stage. The initial model was scanned using a touch-probe scanner and then recreated in digital space using CATIA, allowing it to be cut up and made into component drawings. At the time this was revolutionary, and the use of computers to create non-standard forms seemed very exciting. In an interview with Dassault Systèmes, Gehry’s chief partner Jim Glymph said: “The use of CATIA on our projects has extended the possibilities in design far beyond what we believed was possible ten years ago. We hope that within the next ten years this technology will be available for all architects and engineers.”7

Guggenheim Museum Bilbao

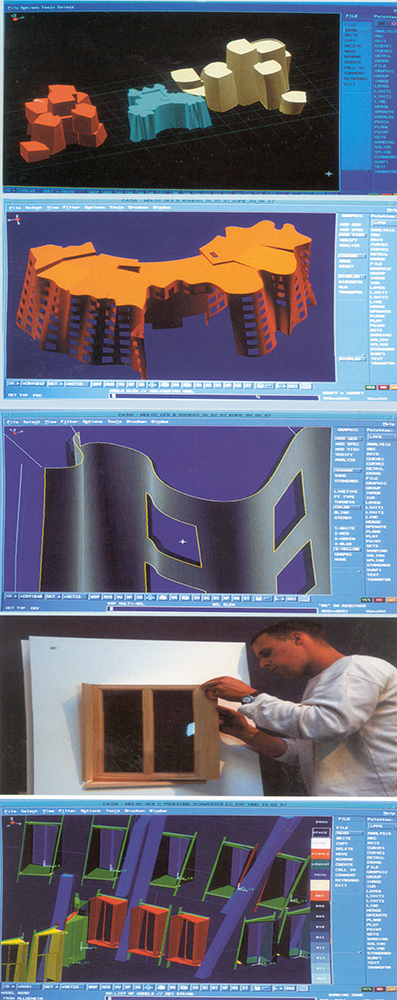

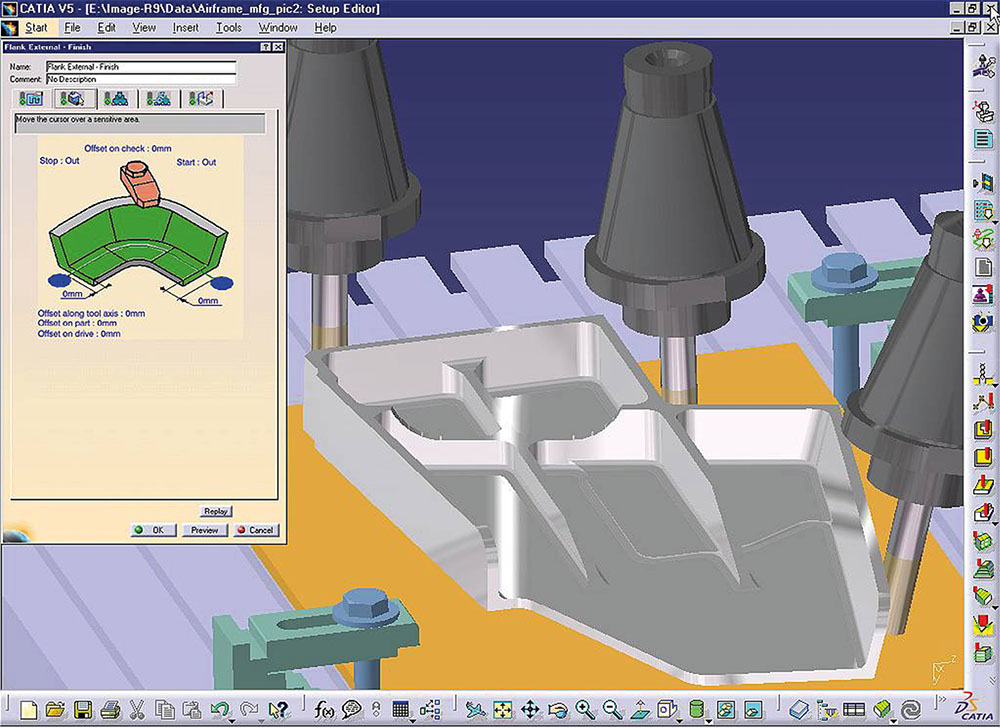

However, what Gehry’s computers produce isn’t, in essence, anything new. They merely facilitate the expansion and extension of an existing process, making the extended version financially viable by speeding it up. By using a computer to scan a shape that had already been designed and physically modelled, the complex drawings and calculations could be automated. Given the sculpture’s size and latticed copper construction, those calculations, and the accuracy of specification required by the metalwork contractor, would otherwise have been too complicated and time-consuming to produce by hand. In essence, computers were used in the design of the sculpture out of necessity. Without the vast number-crunching ability of a computer, the calculations, drawings and specifications needed to make and support a physically viable structure would have been hugely time-consuming and could not have been produced to acceptable levels of accuracy. Once programmed with sufficient physics information, a computer is a far more reliable engineer than a human, because it can carry out finite element analysis and other necessary calculations far more rigorously and the drawings it produces are more accurate. The real benefit of computer draughting comes when no drawings are produced at all and the computer communicates directly with the manufacturing device (be that a multi-axis mill, a laser cutter or a rapid prototyping machine). CATIA, borrowed from the aeronautical industry where it is used to design fighter jets, is a particularly powerful program that can do this. It has a vast range of plug-in accessory modules that allow it to be tailored to one’s needs.8 Gehry uses CATIA to design and specify all the underlying structural componentry required to support the buildings.

Gehry’s computing techniques were groundbreaking at the time; nothing like them had been done before. They opened up a host of new possibilities in architecture, giving architects access to forms beyond modifications of the traditional cuboid. These possibilities were extremely exciting for the architectural community. They allowed architecture to take its place amongst the physical art forms and made buildings as sculpture possible, extending their value beyond the practical purpose of shelter. Many of Gehry’s subsequent buildings have used the same technique, and as a result look very similar to the Bilbao Guggenheim, for example the Walt Disney Concert Hall and the Experience Music Project. More recent projects have used the power of CATIA to a certain extent, but generally only to automate the insertion of windows or the specification of steelwork. Der Neue Zollhof office buildings (1999) are a good example of the more recent Gehry workflow. The form was realised as a physical model and scanned. The structure was then added as an afterthought, and the windows were inserted using a CATIA subroutine that oriented them towards the most pleasing views. The structural data was then output to CNC mills that made the polystyrene moulds for the concrete skin, and the steelwork data was sent to the contractor.

DG Bank, Berlin

The evolution of the Gehry design technique seems to have stopped soon after the introduction of CATIA; all his subsequent buildings have used the design, model, scan, support technique. However, the inherently human aspect of the model creation, and the non-involvement of the computer in the design process, lead to structurally inefficient buildings that require far more structure than an equivalent rectilinear building. The most famous example is the Bilbao Guggenheim museum. It looks spectacular from outside and has had an enormous impact on the economy of Bilbao, but inside it is a confused mass of steelwork that contorts itself wildly in order to support the museum’s complex shell.

The design process used by the Gehry office is merely the hand draughting and engineering process speeded up by computers. The structural data generated by CATIA and ANSYS is not used to modify the form or design, but merely to specify the structure. It is computer-aided drawing, as it was at the dawn of AutoCAD, or even computer-aided sculpture, but the computer is essentially left out of the design process.

The Gehry style is essentially a computerisation of the ancient technique of scaling up a three-dimensional shape, as used in Renaissance sculpture: starting with a small object, he makes it a big object. The computer is left out of the design procedure completely. As such the Gehry method of design, while not a regressive step, is certainly not a step forward.

Frank Gehry’s passive physical modelling can be seen as an early step towards the integration of physical and digital forms. The physical form allows the designer to see exactly what the end product will be, but it leaves no room for the computer to perform any iterative structural correction or interpretation. Simple physical models have been used for centuries to define forms too complex to model mathematically, but with computers these models can be digitised and modified in digital space, a feature Gehry has not taken advantage of. The exciting prospect now is designing the active physical model, or ‘machine’, and then digitising it to allow the design to evolve further.

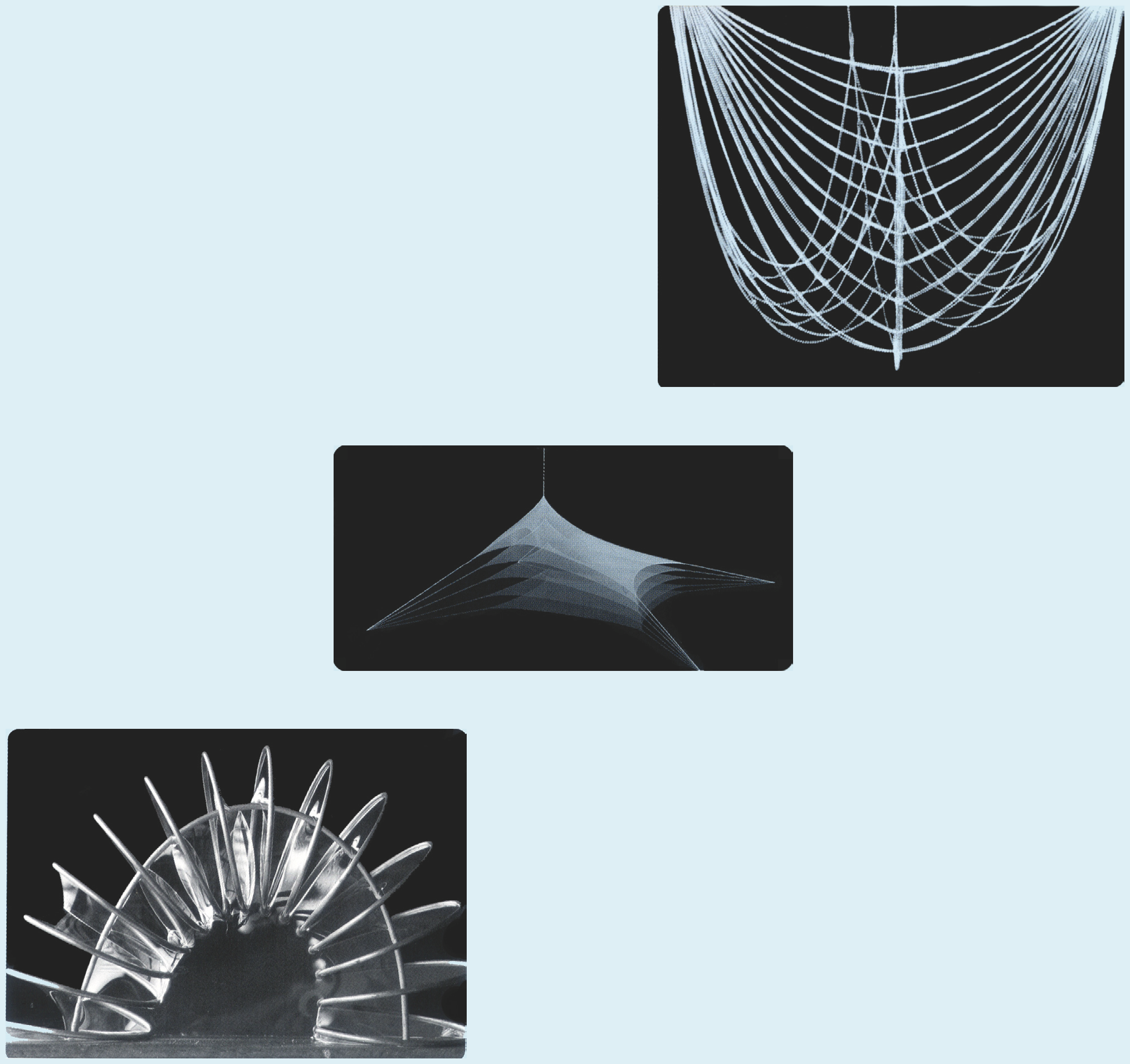

Reiser + Umemoto Catenary Experiment

[NOX] Lars Spuybroek

In contrast to Gehry’s passive use of models in his designs, NOX use their models in an active way, building analogue computers and interrogating the models’ material properties to derive forms and strategies. The work of this Dutch practice is heavily biased towards the design process, which gains as much media attention as the buildings themselves, although for such a conceptual practice they have a surprising amount of built work.

The principal, Lars Spuybroek, is Professor of Digital Design Techniques at the University and GhK of Kassel. He is also a visiting professor at Columbia University, the Bartlett School of Architecture, the Technical University Delft and other academic institutions throughout Europe.8 This academic involvement is evident in the way that the student research Spuybroek supervises feeds back into the practice’s own work. For instance, the competition entry for the World Trade Centre replacement used techniques developed through his teaching in the USA and Germany9. NOX have always been interested in the way media, and specifically computing, relate to architecture. Their early work with the V2 media lab in Rotterdam, creating installations for exhibitions, explored this interplay by mixing architectural forms with multimedia. Many of their buildings have a strong interactive element as well as unconventional built forms. Their H2O freshwater pavilion has an unconventional form, using a portal frame whose section morphs from an octagon to a rectangle. It also has a fully interactive interior with sounds, lights, projections and environmental effects (water sprays, mist etc.), all dependent on the movement and volume of visitors.

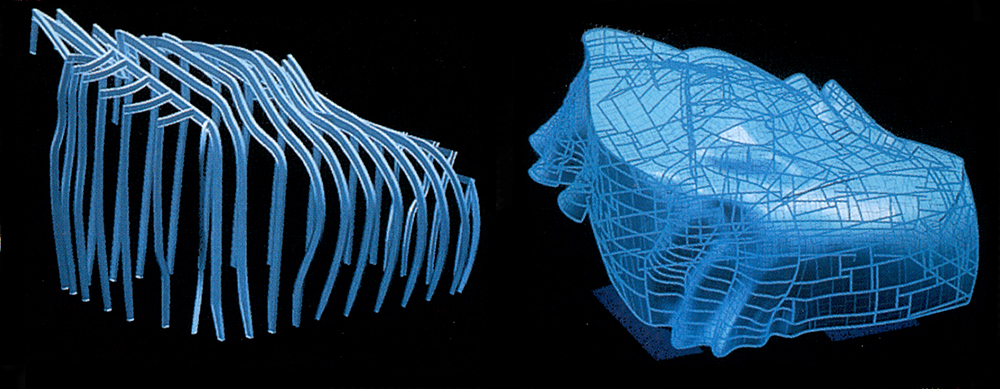

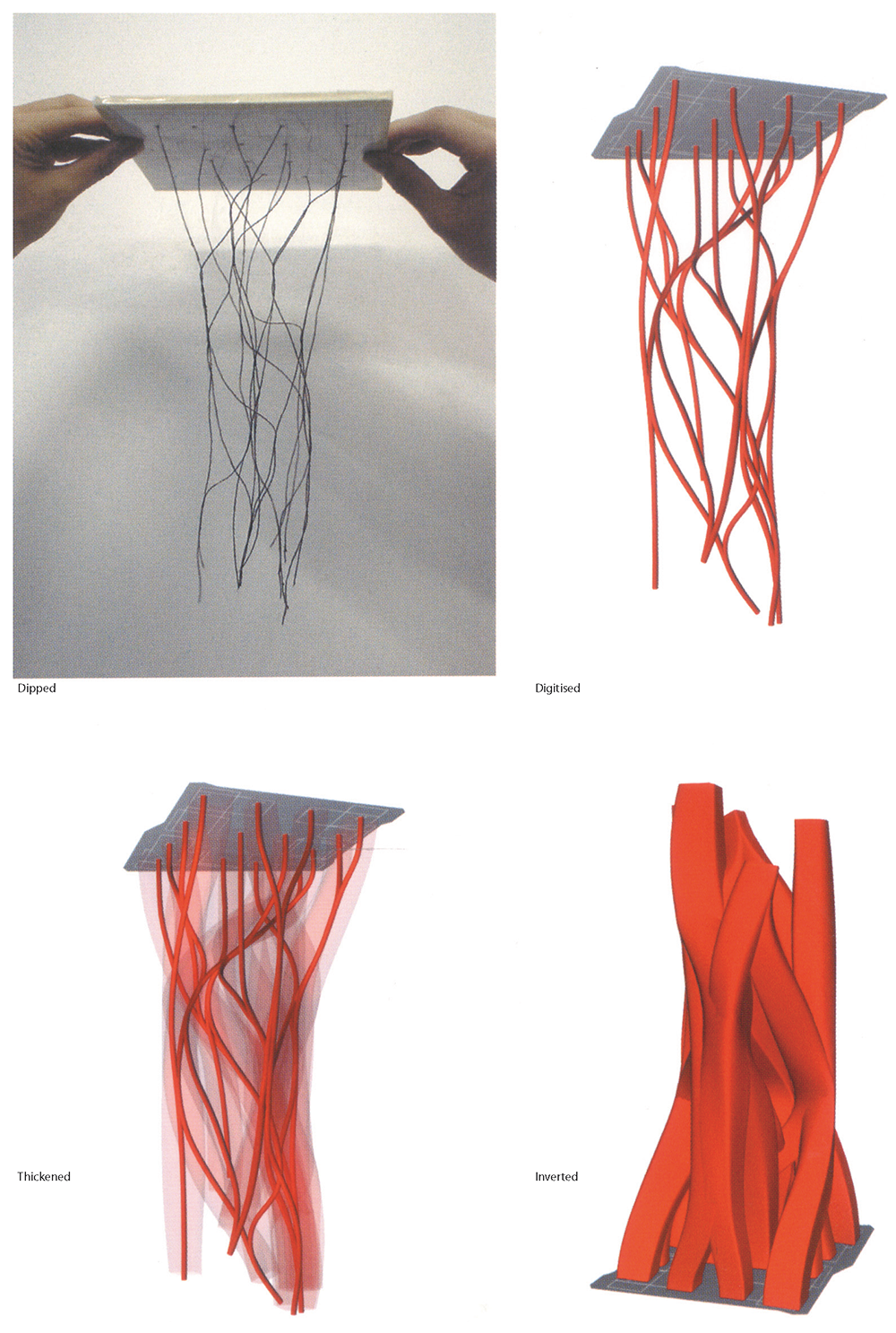

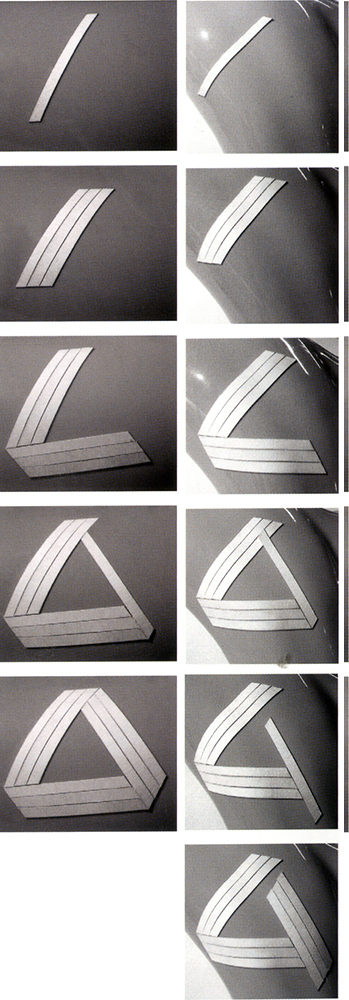

They are influenced by the structural forms of Frei Otto10 and Antonio Gaudí. Their design process has five steps: making a ‘machine’ that embodies the brief, using the machine, working out the rules that govern it, writing an algorithm that describes those rules, and finally using a computerised, and therefore more controllable, version to produce a design. The machine isn’t a fixed item, but rather an object that needs to be designed according to each individual brief.

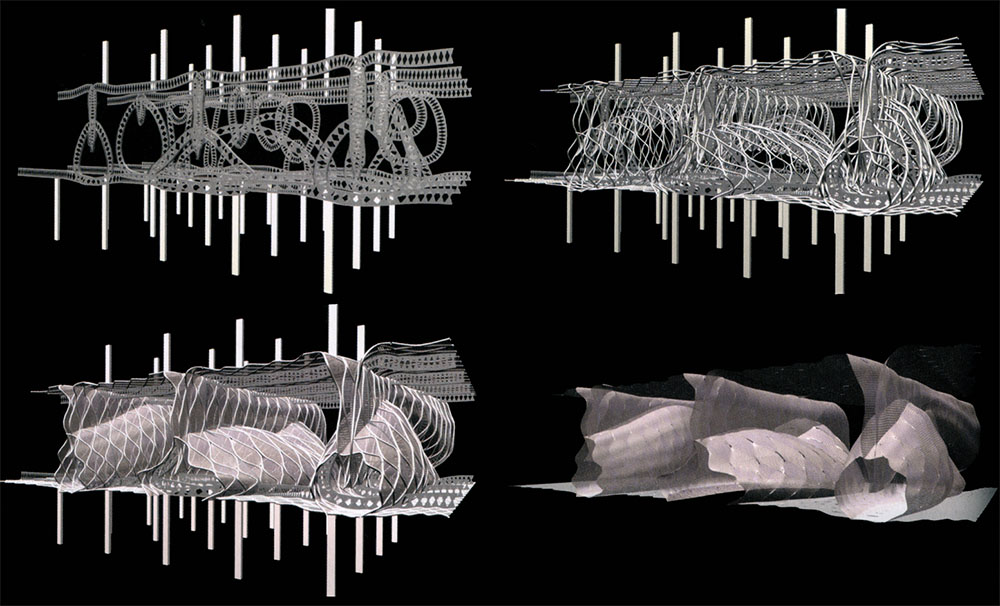

The machines generally take the form of an analogue computer; the entry for the World Trade Centre design was based on a machine that used hanging woollen threads. These threads were immersed in water and removed slowly. The surface tension of the water and the cohesion produced by the wool’s surface finish caused the threads to stick together in places. NOX then studied and replicated the rules that govern that process, and used a digital computer to recreate the analogue computer in a more controlled way. The points where the wet threads met defined open meeting spaces, the nodes of the building. However, the initial model produced too many of these to be commercially viable. The digital model was therefore used to reduce them to a viable number: the computer ran the simulation of the wool thread process many times, displaying only results with the required number of nodes.
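The "run it many times, keep only results with the required number of nodes" step is a generate-and-filter loop, also known as rejection sampling. The sketch below is a hypothetical toy and not NOX's actual simulation. It rests on three assumptions:

- threads hang at random positions along a line;
- neighbouring threads closer than a cohesion distance stick together;
- each bundle of two or more threads counts as a node.

Runs are repeated until one has exactly the target node count. All names and parameter values are illustrative.

```python
import random


def simulate_threads(n_threads: int, width: float, cohesion: float, rng: random.Random) -> list[list[float]]:
    """One run of the toy machine: returns the bundles of two or more threads."""
    xs = sorted(rng.uniform(0.0, width) for _ in range(n_threads))
    bundles, current = [], [xs[0]]
    for x in xs[1:]:
        if x - current[-1] <= cohesion:
            current.append(x)          # close enough to the last thread: they stick
        else:
            bundles.append(current)    # gap too wide: start a new bundle
            current = [x]
    bundles.append(current)
    return [b for b in bundles if len(b) >= 2]  # a "node" = threads stuck together


def find_design(target_nodes: int, n_threads: int = 40, width: float = 10.0,
                cohesion: float = 0.15, max_runs: int = 10_000, seed: int = 0):
    """Re-run the simulation until a run has exactly target_nodes nodes."""
    rng = random.Random(seed)
    for run in range(1, max_runs + 1):
        nodes = simulate_threads(n_threads, width, cohesion, rng)
        if len(nodes) == target_nodes:
            return run, nodes
    return None  # nothing acceptable within max_runs


if __name__ == "__main__":
    result = find_design(target_nodes=8)
    if result is None:
        print("no run met the brief")
    else:
        run, nodes = result
        centres = [round(sum(b) / len(b), 2) for b in nodes]
        print(f"run {run}: nodes at {centres}")
```

The physical machine would need a fresh dip for every attempt. The digital version makes thousands of attempts cheap, which is the point the text is making.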

NOX World Trade Centre Wool Thread Machine

If you accept that all natural phenomena are governed by physics, then a set of rules, or physical principles, can be established that describes any action witnessed. This allows the design to be reached much faster. The physics can be replicated in the computer, so the architect needn’t dip a thousand permutations of woollen threads to achieve the optimal design. The digital process can be repeated many thousands of times extremely quickly and will eventually produce a form that fulfils all the brief’s requirements. In addition, the digitised version can be precisely controlled whilst still retaining the essential qualities and the original concept of the design.

The main advantage of this approach comes from using gravity as one of the main drivers of the analogue computing process. Gravity is a major force in the real world, so the forms derived from this analogue process will generally reconfigure themselves to account for it, producing an efficient structure. Inverted hanging machines generally do the engineering analysis automatically. At the scale at which NOX’s models are generally built, attractive and repellent forces such as surface tension and static electricity also have an effect, but the resultant form is configured to take all of these into account. Material choice matters when constructing the analogue computing machine, because “Materials, already informed, yield geometry as a consequence of their materiality”11. The nature of the material will affect its behaviour in the analogue calculating machine, so the decision to use 80gsm or 120gsm paper, or PVC, leather or wool threads, in the design of the machine is important. Equally, the finished product will also be ‘material’, so the inferences drawn from the model’s structure can be directly related to it.

The D Tower in Doetinchem in the Netherlands (1998-2004) was designed using a combined process. The top was designed by deforming a sphere in an animation using an algorithm, and “At the point where the sphere, which starts out convex only, seems to have as much concave geometry as convex, [they] suspend the animation.”12 Once the top section was fixed, a shopping bag analogy was used to design the legs. A mesh shopping bag reconfigures its arrangement depending on what is being carried at the time; the top of the D Tower was conceptualised as the shopping and the bag became the legs. This was inspired by the idea of using hanging chains to design catenary arches and force diagrams, originally used by the Romans to design bridges and most famously by Gaudí to design the Sagrada Família. Traditionally, the floor planes are fixed horizontally and the model is inverted. Strain gauges in static cords then measure the tension, which determines the diameter of each leg. However, the top section of the D Tower is not inhabited, so its orientation is unimportant. Instead, the legs’ diameters were fixed by using elastic cords, and the top was allowed to rotate to accommodate the forces. This is a simple but effective application of analogue computing.

NOX Shopping Bag Analogy For The D Tower

A rule defines the pattern that covers the surface of the Son-O-House: regular surfaces (above) give regular tessellation, whereas irregular surfaces (right) give broken tessellation.

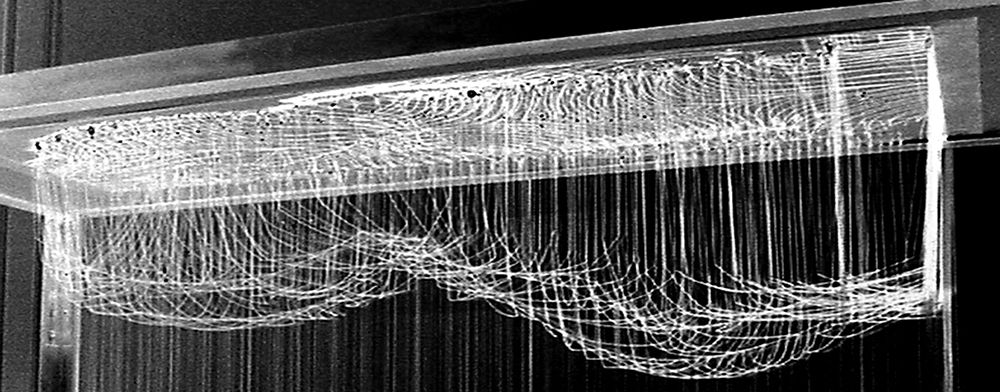

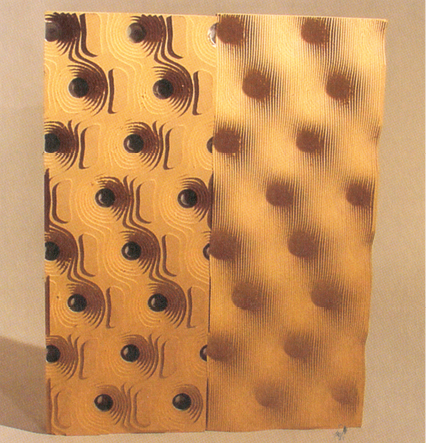

As many of their projects have complex double-curved forms, covering them becomes more problematic. For Blowout (a public toilet at Neeltje Jans in the Netherlands, 1997) the form was simply covered in mesh and sprayed with concrete, creating a homogeneous surface. The more recent Son-O-House13 has a much more complicated skin of expanded mesh, applied according to a set of rules given to the contractor. The rules govern the positioning and tessellation of a series of panels. The orientation of the cuts that form the expanded mesh gives the resulting surface a pattern, and the moiré effect it produces is very interesting.

The NOX approach embraces indeterminacy: their iterative computational experiments yield unpredictable results and are pushing NOX on to produce more and more innovative designs. Their use of computers is very much evolutionary. Even though they are repeating many of the experiments performed by Frei Otto, they apply the computer in a way that takes those experiments out of the realm of play and firmly into that of useful architectural tools. These approaches allow NOX to control the overall design process, but the points at which they let go and allow the machines to take over create unexpected and elegant solutions.

NOX Son-O-House

The complexity involved in the final form of something like a wool thread model can usually be reduced to a surprisingly simple set of rules. The study of complexity generated from simple systems is very interesting: positive or negative feedback can send a system off into total chaos, or stop it from really doing anything. These mathematical concepts can be applied to natural phenomena such as the waxing and waning of caribou populations10 or the arrangement of seeds in a sunflower head.
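The feedback behaviour described here is often illustrated with the logistic map, x(n+1) = r·x(n)·(1 − x(n)). It is a textbook model of a population held in check by its own size. It is used here purely as an illustration; the caribou studies cited above are not being modelled. For a moderate growth rate r the population settles to a steady value. For r close to 4 the same one-line rule produces erratic, chaotic swings.

```python
def logistic_orbit(r: float, x0: float = 0.2, steps: int = 200) -> list[float]:
    """Iterate x -> r * x * (1 - x) and return every value, starting with x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


if __name__ == "__main__":
    steady = logistic_orbit(2.8)   # feedback damps out: settles on 1 - 1/r
    chaotic = logistic_orbit(3.9)  # feedback overshoots: never settles
    print("r = 2.8, last values:", [round(x, 4) for x in steady[-4:]])
    print("r = 3.9, last values:", [round(x, 4) for x in chaotic[-4:]])
```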

So if nature holds such fascinating structural mathematics then what happens if we abstract those rules and apply them to architecture? This is exactly what the Emergence and Design Group have done.

[Emergence and Design Group] Michael Hensel, Mike Weinstock, Achim Menges

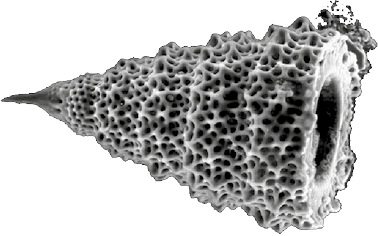

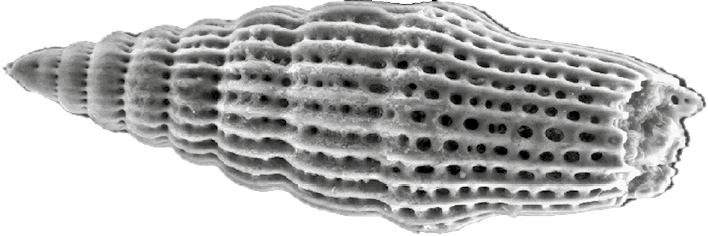

In 1917 D’Arcy Thompson published a book called ‘On Growth and Form’, which includes studies of the structure of complex single-cell organisms11. This, in part, led to bioconstructivism, which looks at the ways in which nature has organised itself. Examples include the tessellation of pine cone ‘scales’ based on opposing helices, and the arrangement of broccoli florets according to the Fibonacci sequence. Emergence is a rigorous application of mathematics to these findings from nature, and a subsequent reapplication of those principles to other aspects of life, such as the development of artificial intelligence or the prediction of stock market fluctuations. Until recently the term ‘emergence’ was generally used as a buzzword in architectural circles to refer to any seemingly complex system. It has now, however, been steered towards the more stringent definition of “a system that cannot be deduced from its parts”12.
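A widely used model of this kind of ordering is Vogel's model of a sunflower head. Seed k is placed at angle k × 137.5° (the golden angle) and at a radius proportional to √k. The spirals the eye picks out in the resulting pattern come in consecutive Fibonacci numbers. The sketch below generates the seed positions and, for comparison, the Fibonacci sequence. The scale factor `c` and the function names are arbitrary choices.

```python
import math

GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))  # radians, about 137.5 degrees


def sunflower(n_seeds: int, c: float = 1.0) -> list[tuple[float, float]]:
    """Vogel's model: seed k sits at angle k * golden angle, radius c * sqrt(k)."""
    points = []
    for k in range(n_seeds):
        theta = k * GOLDEN_ANGLE
        r = c * math.sqrt(k)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def fibonacci(n: int) -> list[int]:
    """First n Fibonacci numbers, starting 1, 1."""
    seq = [1, 1]
    while len(seq) < n:
        seq.append(seq[-1] + seq[-2])
    return seq[:n]


if __name__ == "__main__":
    print(f"golden angle = {math.degrees(GOLDEN_ANGLE):.3f} degrees")
    print("Fibonacci:", fibonacci(12))
    for x, y in sunflower(8):
        print(f"({x:6.3f}, {y:6.3f})")
```

The only rule is one fixed turn and a steady outward step per seed. Plotting a few hundred points is enough to see the interlocking spirals appear.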

The most commonly cited example of the application of emergent design to architecture is termite mounds. Termite mounds have a complex system of tunnels that the termites use for their daily lives, but they are also used by the termites to carefully regulate the temperature of the whole mound by blocking and unblocking external openings, depending on the prevailing winds and the internal ambient temperature. There is no central control from the queen, and the termites themselves have no concept of the global situation, but as the termites are essentially identical, if one of them gets cold then it simply blocks up the nearest hole, and then unblocks it when it gets too warm. As the termites work to a very simple set of rules, and each of them has the same programming, the system runs efficiently.

Termites use an even simpler set of rules when building the mound in the first place.

Whilst wandering randomly

If you find something

then pick it up

unless you’re already carrying something

in which case drop it

They change the environment and the changed environment modifies their behaviour13.

A termite finds a piece of wood and carries it around until it finds another piece of wood. It then drops the piece, continues wandering until it finds a third one, and repeats the process. As time passes many small mounds are produced, which develop into fewer and fewer larger mounds until one grows more than the others. Larger mounds have a smaller surface-area-to-volume ratio than smaller ones, so in a larger mound a greater proportion of the pieces are ‘protected’ from collection by being in the interior. As a result the larger mounds tend to grow and the smaller ones to shrink until only one is left.
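The termite rules above are often demonstrated as a simple agent simulation, a well-known version being the termites example in Mitchel Resnick's StarLogo work. The sketch below is a minimal one-dimensional variant written for illustration, with assumed parameters. Wood chips sit on a ring of cells, and termites wander one step left or right at random. A termite that bumps into a chip picks it up if its jaws are empty. A loaded termite that bumps into a chip drops its own in the empty cell it just came from. Chips are only ever put down next to other chips, so over many steps they tend to gather into fewer, larger piles. Real termites, and most published versions, work in two or three dimensions.

```python
import random


def count_piles(cells: list[bool]) -> int:
    """Number of separate runs of chips on the ring (cells[-1] wraps to the end)."""
    return sum(1 for i, chip in enumerate(cells) if chip and not cells[i - 1])


def run_termites(n_cells: int = 200, n_chips: int = 50, n_termites: int = 20,
                 steps: int = 200_000, seed: int = 3):
    rng = random.Random(seed)
    cells = [False] * n_cells
    for i in rng.sample(range(n_cells), n_chips):       # scatter the wood chips
        cells[i] = True
    position = [rng.randrange(n_cells) for _ in range(n_termites)]
    carrying = [False] * n_termites
    piles_at_start = count_piles(cells)

    for _ in range(steps):
        t = rng.randrange(n_termites)                    # move one termite at a time
        previous = position[t]
        here = (previous + rng.choice((-1, 1))) % n_cells  # "whilst wandering randomly"
        position[t] = here
        if cells[here]:                                  # "if you find something"
            if not carrying[t]:
                cells[here] = False                      # "then pick it up"
                carrying[t] = True
            elif not cells[previous]:
                cells[previous] = True                   # already carrying: drop it
                carrying[t] = False                      # beside the chip just found
    return cells, carrying, piles_at_start


if __name__ == "__main__":
    cells, carrying, start = run_termites()
    print(f"piles at start: {start}, piles at end: {count_piles(cells)}")
    print("".join("#" if c else "." for c in cells))
```

No termite knows where the piles are, yet the piling emerges from the rule alone.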

Termite Nest

Emergent systems tend to be very complex, but the complexity is created by a large number of iterations of a very simple set of rules. The Spirograph sets that children use are a very simple example of this. The rules involved in the creation of the pattern are very simple, but the actual pattern created is much more complex.
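The Spirograph makes the point neatly. A pen hole at distance d from the centre of a wheel of radius r, rolling inside a ring of radius R, traces a hypotrochoid: x = (R − r)·cos t + d·cos((R − r)t/r), y = (R − r)·sin t − d·sin((R − r)t/r). Two whole-number radii and one offset are the entire rule set. Even so, the curve only closes after the wheel has gone round the ring r / gcd(R, r) times. The values below are arbitrary, and `hypotrochoid` is a hypothetical helper name.

```python
import math


def hypotrochoid(R: int, r: int, d: float, samples_per_turn: int = 360):
    """Points traced by a Spirograph pen; R and r are whole-number radii (tooth counts)."""
    turns = r // math.gcd(R, r)          # trips round the ring before the curve closes
    n = turns * samples_per_turn
    k = (R - r) / r
    points = []
    for i in range(n + 1):
        t = 2 * math.pi * turns * i / n
        x = (R - r) * math.cos(t) + d * math.cos(k * t)
        y = (R - r) * math.sin(t) - d * math.sin(k * t)
        points.append((x, y))
    return turns, points


if __name__ == "__main__":
    turns, pts = hypotrochoid(96, 36, 20)
    print(f"closes after {turns} turns, {len(pts)} points; start {pts[0]}, end {pts[-1]}")
```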

The Emergence and Design Group is attached to the Emergent Technologies and Design master’s program at the Architectural Association in London. Their work is essentially research-based, trying to “produce a new research-based model of architectural enquiry”14 rather than following the formal or concept-led design methods of recent years.

The Emergence and Design Group look to nature to find their rule sets. Evolution has had millions of years to develop structural precedents, and because cells function autonomously, these natural structures grow according to very simple rules. For example, plants lean towards the sun, thereby maximising the area of their leaves exposed for photosynthesis. They do this without any central processing unit, simply by using the turgescence of certain cells that are activated by exposure, or lack of exposure, to light15. If these growth formulae can be determined, then they can be extracted and reapplied. Once the group has sufficiently abstracted the rule set that governs the structure of interest, they apply it to an architectural form and use a computer program to grow and evolve a design.

The research that the Emergence and Design Group are doing uses computers to simulate systems that would be inconceivably time-consuming to calculate manually. The insights taken from these simulations are allowing new ways of conceiving structures, evolving architectural design into new areas.

Radiolaria - single cell organisms with complex structural organisation

Romanesque Broccoli Florets Displaying Fibonacci Ordering

The Smart Geometry Group

Lars Hesselgren (KPF), Robert Aish (Bentley), Hugh Whitehead (Foster and Partners), J Parrish (Arup Sports).

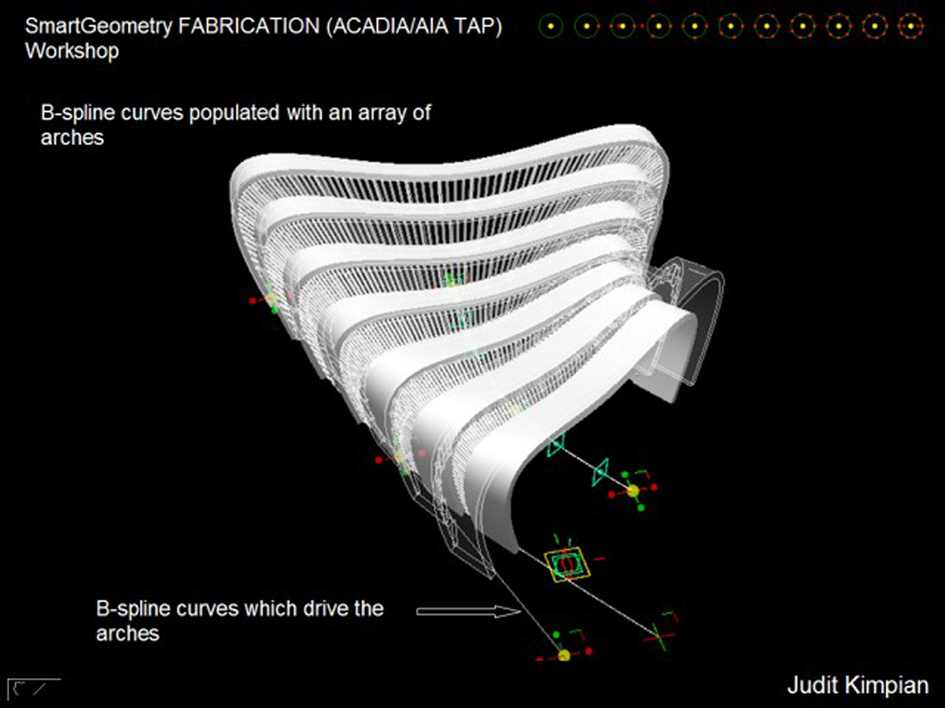

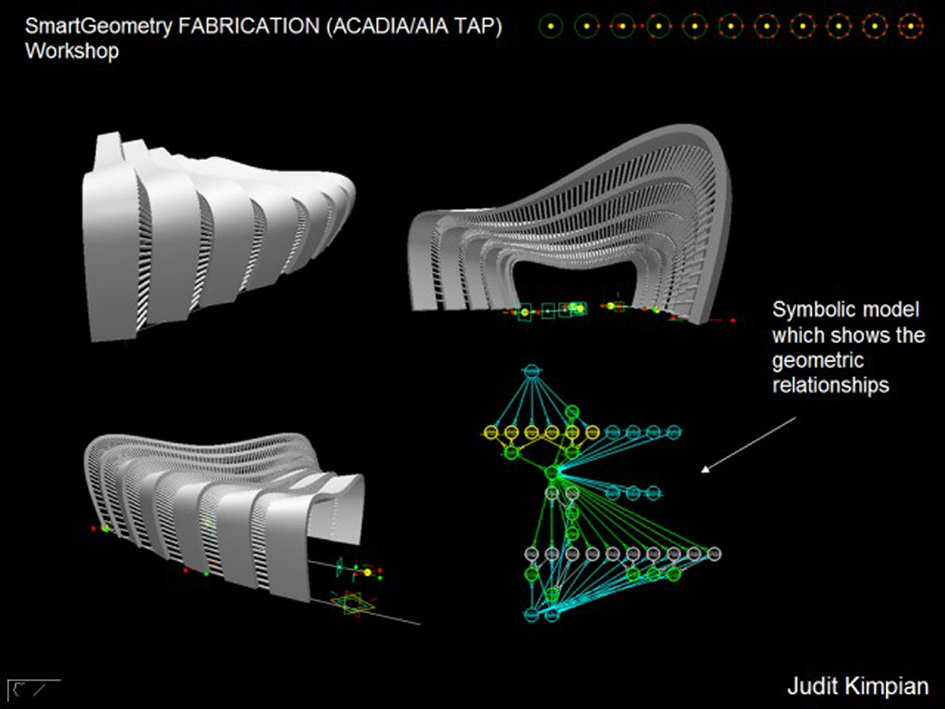

The Smart Geometry Group is a fairly loose collection of people all working at a very high level in parametric computer modelling. They are generally concerned with educating the new generation of architects in methods of parametric design, and with pushing the envelope of parametric design itself. They work predominantly with a new, as yet unreleased software package called GenerativeComponents, made by Bentley Systems (makers of MicroStation22), who also sponsor the group.

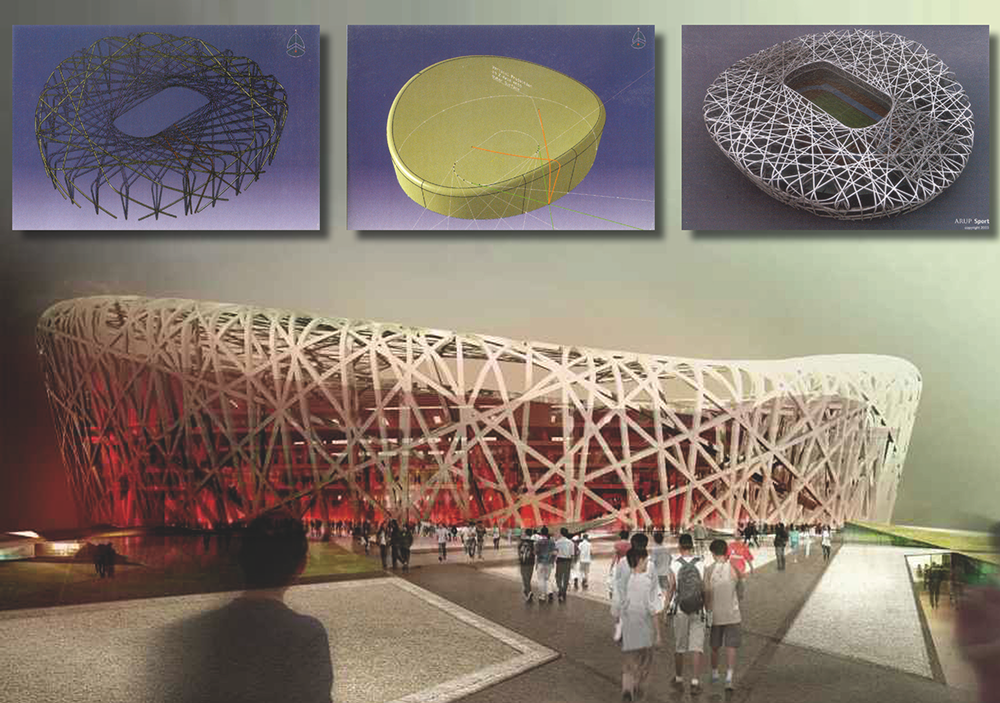

The program allows a few very simple rules to be set up that produce much more complicated geometry. By manipulating the inputs to those rules, one set of rules can be used in a great number of situations to produce significantly different results. J Parrish has been designing parametrically since programmable calculators first became available, when he codified an algorithm for working out the pitch of stadium seating banks. Since then he has worked for Arup Sport designing sports stadia, including the Millennium Stadium in Cardiff and, with Herzog & de Meuron, the stadium for the 2008 Beijing Olympics. The parametric nature of the design method allows him to design, in his own words, “a significant amount of a stadium whilst waiting in an airport.”23 Realistically, this means that the algorithms do much of the hard work of figuring out the details of the design in a few minutes, rather than the month it would take manually, so many more iterations of a design can be produced. In turn, it becomes financially viable to test all the possible options rather than picking the first one and hoping it is the best. Initially the parametric algorithms were limited to designing the sightlines for seating arrangements. They have since become very advanced and now cover egress, concessions, structure, circulation, roof structure and more. Arup Sport can now have an entire stadium designed, modelled and even animated in three days. Parrish’s real ‘dream’ is to design a stadium in one day, and then be able to design another the next.
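The seating-bank calculation that such algorithms start from can be illustrated with the widely used C-value sightline rule. Each row is raised just enough that a spectator's line of sight to the point of focus clears the eye of the spectator in front by a margin C. The sketch below assumes that rule, with eye heights and distances measured from the point of focus. It is not a reconstruction of Parrish's or Arup Sport's software, and the example values are purely illustrative.

```python
def seating_bowl(rows, eye_height, distance, tread, c_value):
    """Rows of a seating tier generated with the C-value sightline rule.

    eye_height: height of the first row's eye above the point of focus (m)
    distance:   horizontal distance from the first row's eye to the point of focus (m)
    tread:      row depth (m)
    c_value:    required clearance of each sightline over the eye in front (m)
    Returns a list of (distance, eye_height, riser) tuples, one per row.
    """
    D, R = distance, eye_height
    bowl = [(D, R, 0.0)]
    for _ in range(rows - 1):
        # Riser N such that the next row's sightline passes exactly C above this
        # row's eye:  N = (R + C)(D + T) / D - R
        riser = (R + c_value) * (D + tread) / D - R
        D, R = D + tread, R + riser
        bowl.append((D, R, riser))
    return bowl
```

Because each riser depends on the row below it, changing a single input such as the tread depth or the C-value regenerates the whole bowl. The risers grow row by row, which produces the characteristic curved section of a stadium tier.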

A Parametrically Designed Stadium Bowl

The Beijing stadium has a complex ‘bird’s nest’ structural arrangement. Parametric design was employed both to detail the connection at each intersection and to make sure that the network actually works efficiently as a structure. CATIA was used to design, to a certain extent, the actual configuration of the bird’s nest once its overall form had been set by the seating arrangement in the bowl (which was itself set parametrically). CATIA interrogated each member and then output the exact detailing the contractor needed to bend, cut and drill the relevant parts.

Beijing Olympic Stadium and CATIA screenshots showing design methods

The new proposed development for Kowloon’s waterfront uses GC (GenerativeComponents) extensively, both in designing the form and in detailing it so that it actually works. The high level of tightly controlled automation allowed Foster & Partners to produce a virtually finished competition entry on a very short timescale. Once the concept had been formulated, GC was used to design the actual form and the construction detailing. By adjusting the model’s parameters, the designers could pull the design around as if it were a malleable physical model. This gave a much greater degree of design resolution than would otherwise be possible.

Over the last two years the group has run a number of seminars and workshops to introduce some of the industry’s top designers, and some students, to the possibilities offered by the beta version of GC. They assert that with GC one is modelling a set of relationships between points, lines and planes rather than geometry, and the geometry is almost incidental. Ironically, mathematicians in some fields see this as quite an accurate definition of geometry.
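The idea of modelling relationships rather than geometry can be illustrated with a tiny associative model. It has free ‘driver’ points that the designer moves, and dependent points defined only by rules over their parents, re-evaluated whenever they are queried. This is a hypothetical Python sketch: `Node`, `Free`, `midpoint` and `lerp` are invented names, and this is not the GenerativeComponents API.

```python
class Node:
    """A value defined only by a rule over parent nodes (hypothetical sketch)."""

    def __init__(self, rule, *parents):
        self.rule = rule
        self.parents = parents

    def value(self):
        # Re-evaluated from the parents on every call, so edits to drivers propagate.
        return self.rule(*(p.value() for p in self.parents))


class Free(Node):
    """An independent 'driver' point that the designer moves directly."""

    def __init__(self, v):
        super().__init__(None)
        self.v = v

    def value(self):
        return self.v


def midpoint(a, b):
    return tuple((x + y) / 2 for x, y in zip(a, b))


def lerp(t):
    """Rule placing a point a fraction t of the way from the first parent to the second."""
    def rule(a, b):
        return tuple(x + t * (y - x) for x, y in zip(a, b))
    return rule
```

Consider `a = Free((0.0, 0.0, 0.0))`, `b = Free((10.0, 0.0, 4.0))` and `m = Node(midpoint, a, b)`. Here `m.value()` is `(5.0, 0.0, 2.0)`. After setting `b.v = (20.0, 0.0, 0.0)` it becomes `(10.0, 0.0, 0.0)` without `m` ever being redrawn. Only the relationship was stored.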

The methods of producing architectural design researched by the Smart Geometry Group do more than automate the menial and time-consuming: they also allow the design to work in tandem with the structure. This brings the architect and the engineer back into dialogue throughout the process. Designs can then become more innovative and interesting, with elegance an inherent part of them, rather than the process becoming a battle between architect and engineer to get the desired result.

The Smart Geometry Group’s use of parametric algorithms to enable and accelerate design is probably the theoretical research most likely to become mainstream. Within the next ten years the concept of architect as tool builder will become a reality simply because of commercial pressures. Their techniques can allow designers to produce mediocre designs quickly using ready-made tools. However, the true future of the software lies in the opportunities for using the tool-building process as a design task in itself.

Parametric Study For a Sculpture - Dr Chris Williams

The most significant creation of the information revolution has been the birth of the internet. As modern life becomes increasingly dependent on its interactive interfaces, and on the vast wealth of information stored within it, the way that we interact with computers becomes a concern. Do we look at them or do we inhabit them?


Marcos Novak

Novak has been involved in researching and conceptualising the use of computers in architecture since the late seventies. He has held various positions at American universities researching the architectural implications of digital technologies, and has been at the forefront of the research into virtually all the computer technologies that are applicable to architecture today.

Given a classical Greek education, Novak was fascinated from an early age with geometry, music, art, logic and more. He eventually settled on becoming an architect and struggled to reconcile his interests with his chosen profession, as many urged him to discard some of them to focus on others. Refusing to do so, he attempted to make an architecture that encompassed them all.

Because much of Novak’s work is purely research-based, it can push the boundaries of what is considered architecture into art, computer graphics and mathematics. He stresses the importance of being “intellectually multilingual”16, as do an increasing number of architects who are pushing the boundaries of what the computer makes possible. The ability to speak the languages of other disciplines allows the conceptual and technical advances of those disciplines to enrich architecture.

Generative composition has long been an accepted practice in music, and Brian Eno’s Koan17 project was the first to bring it to a mass audience. Novak took the algorithms that generated musical compositions and modified them to work on spatial dimensions. His theory of ‘liquid architecture’ is based on the way music is formalised. The parametric nature of the classical orders inspired a way of thinking about architecture as a set of parameters. This was lost on most people at the time, but has become one of the most important concepts in modern computerised architectural design. He attempted to create computer code that would recognise beauty, long considered a dirty word in intellectual circles because of how elusive a rigorous definition is. He used an amalgamation of several previous methods taken from different fields18 … forms were indeed beautiful and elegant, but did contain objects floating in space and other unbuildable elements.

Through his teaching and research, Novak discovered that the early tools designed for a generic designer were inappropriate for most applications, because most designers were working in non-generic situations. He started making his own tools and integrating computers into his teaching at Ohio State University five years before Bernard Tschumi set up the ‘paperless studio’ at Columbia. His theory was that if these ‘tools’ could free architectural form from the economic constraints to which it is currently tied, then form would become regulated by human preference.

Alien Bio

… simulated binocular vision, and the navigation system based on head movement removed the sense of dislocation caused by being able to look beyond the two-dimensional screen and by using one’s hands to move the field of vision. This was the first significant foray into inhabiting cyberspace in an architectural manner. Although most of the environments conceived were ‘unbuildable’ in the physical world, it set a precedent for other architects to follow.

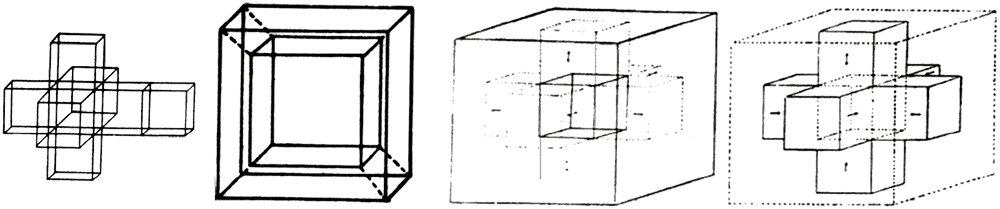

With the constraints of designing for the physical world essentially removed, Novak experimented with complex geometrical concepts in the virtual world. The first barrier to transcend was the obvious one of multidimensional space: his complex parametric four-dimensional designs yielded hypersurfaces as their resultant three-dimensional forms. This allowed him to create architecture that does not conform to our conventional Euclidean view of the world.
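One simple way to picture how a four-dimensional definition yields three-dimensional form is to treat the fourth coordinate as a slider. Fixing it slices the 4D shape into an ordinary 3D one. The sketch below is an illustrative assumption rather than Novak's method. It uses a 3-sphere (the 4D analogue of a sphere), whose slices are spheres of radius √(r² − w²) that shrink to a point as |w| approaches r. The function names are hypothetical.

```python
def slice_4d(field, w):
    """Fix the fourth coordinate of a 4D implicit field, giving a 3D implicit field."""
    return lambda x, y, z: field(x, y, z, w)


def glome(x, y, z, w, r=1.0):
    """3-sphere of radius r: negative inside, zero on the hypersurface, positive outside."""
    return x * x + y * y + z * z + w * w - r * r


def voxels_inside(field, half_extent=1.0, n=20):
    """Count cells of an n*n*n grid over [-half_extent, half_extent]^3 whose centres lie inside."""
    step = 2 * half_extent / n
    centres = [-half_extent + (i + 0.5) * step for i in range(n)]
    return sum(1 for x in centres for y in centres for z in centres if field(x, y, z) < 0)
```

Sweeping `w` from 0 towards 1 and counting voxels shows the 3D form shrinking and vanishing. Richer 4D fields would give slices that change shape, not just size.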

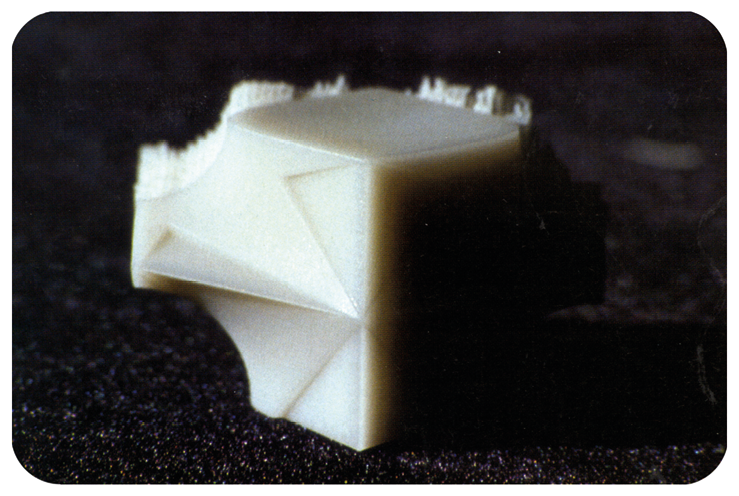

A Rapid Prototyped Alien Bio


Novak’s work continues to explore the boundaries of what digital technologies make possible, and the inhabitation of virtual space is now a significant issue in architectural discourse. Most of Novak’s work is highly conceptual, and beyond the understanding of the general populace.

Asymptote, however, are creating virtual spaces that blur the boundaries between the real and virtual worlds.

Asymptote - Hani Rashid & Lise Anne Couture

Asymptote are involved in exploring the boundary between real and virtual space; their work either straddles this boundary or remains entirely in the virtual domain. Hani Rashid and Lise Anne Couture have been pushing the boundaries of what can conventionally be called architecture for the last nine years. They courted major media controversy with their New York Stock Exchange Virtual Trading Floor (NYSE 3DTF). The NYSE 3DTF was a virtual environment for trading stocks. Whether a space created inside a computer, one that cannot be physically inhabited, could be deemed architecture while it remained in the intangible realm of virtual space was fiercely contested.

i:scapes, Venice Biennale 2000

Ever since William Gibson popularised the idea of cyberspace in his 1984 novel Neuromancer19, people have been discussing ways of inhabiting this space. In cyberspace the traditional constraints of physics no longer control one’s actions. The space is genuinely three-dimensional, rather than the two-and-a-bit dimensions we inhabit in everyday life because our physiology constrains us to resting on, or briefly above, a horizontal plane, so virtual objects can be positioned at any point. In virtual space time is not a factor as it is in real space, because the computer’s clock can be made to run backwards or even stop if required.

Asymptote have tried to formalise how people would interact with a 3D virtual space without a priori knowledge of how to operate in completely free space. In the NYSE 3DTF20 the final design has a plane defining the lowest visible point, easily recognisable as a floor, which gives users a datum plane by which to orient themselves. However, as normal physics does not apply, they have used temporal displacements and distortions to display greater detail. These also bring together pieces of information that are not initially adjacent, such as stock prices and news reports, or demographic data overlaid onto share trends, so that initially disparate parts of the space can be viewed together. This is essentially a simulation of real space (with a floor and walls) that takes advantage of some of the opportunities offered by the computerised environment to create a more legible data structure. That the floor plane was deemed necessary shows how early a stage virtual environments were at when it was designed. It seemed necessary to approximate an easily recognisable architectural interior for the space to be deemed architecture rather than simply a graphical user interface (GUI). The temporal distortions are the first excursion into the different properties of this alien space, but in general the environment is reasonably constrained to conventional norms of inhabitation. In light of the success of the NYSE 3DTF, Asymptote were commissioned to refit the physical NYSE building. In response they installed a vast array of data screens and access points to the 3DTF in order to bring the virtual into the real space. The 3DTF can be seen as an augmentation of the real trading floor. Alternatively, as is increasingly the case with the rise of internet commerce, the real floor can be seen as a physical representation of the 3DTF.


3D NYSE

Another example of this technique is the Virtual Guggenheim Museum, where even the constraint of a horizontal datum was removed. The viewer (operator, user, inhabitant) is free to move and rotate 360° in any plane in order to view the virtual and internet art ‘objects’. This project shows how Asymptote, and general attitudes to the inhabitation of virtual space, have moved on, in that one is no longer constrained by virtual gravity or virtual inertia. The Virtual Guggenheim was conceived as a native environment in which to experience artwork whose intended means of display and distribution is the internet. The concept of a computerised three-dimensional space is difficult, as virtually all computer screens are viewed in two dimensions. Yet even in life we see a perspective or other projection of a three-dimensional object displayed on a two-dimensional plane. The eye has only a two-dimensional surface onto which light from real three-dimensional objects is projected, and the brain is readily persuaded by the immersion of the medium. This allows virtual space to be believable rather than simply being a GUI. This project allows the difference between virtual building and virtual architecture to be defined. A virtual building is a computerised visualisation of an existing or unbuilt building. Virtual architecture, by contrast, is a virtually conceived and defined space that takes advantage of the removal of spatial and physical constraints, while being mindful of the limited number of senses engaged in experiencing it. The concept of the site as a space or area that defines where people can experience the architecture is stretched when the influence of the internet is considered. If the architecture has a virtual component, then immersion and experience extend to anywhere that can access the internet.

In the Fluxspace 2.029 installation at the 2000 Venice Biennale (where Asymptote contributed to the USA pavilion alongside Greg Lynn), a pair of opposing fish-eye digital cameras took a picture every 30 seconds, and large revolving mirrors ensured that the photos were never the same. This is a fairly basic method of integrating virtual and real space, but with advances in technology and architectural thought, more considerable interaction is likely in the future.

Asymptote’s work integrates the computer into the architecture, and sometimes the architecture into the computer, allowing the two to exist side by side and interact. Their way of working is bound to become more widespread as clients demand buildings that can interact with the ‘information age’. Also, as people begin to appreciate the possibilities of inhabiting the internet rather than just viewing it, defining the spatial characteristics of cyberspace will become a pressing issue.

Virtual Guggenheim Museum

While Asymptote’s work explores the extensive realm of virtual space and how it bleeds into and interacts with real space, Greg Lynn is focused on the intensive procedures of fabrication and is concerned with bringing designs that exist in virtual space into the physical world. Although they are at opposite ends of the spectrum of digital design, their research methods overlap. Both Rashid and Lynn teach, in order to push the intellectual side of their work forward much faster than if they relied on visionary clients to fund experimentation.


Form - Greg Lynn

Greg Lynn’s fascination with fabrication seems a little odd in the context of digital design. It becomes clearer when you consider that he is addressing the opposite side of the equation to most other practitioners in the digital realm, attempting to bring the shapes designed in Maya and 3D Studio out of the digital realm and into the physical one.

Nobody has addressed the construction of digitally designed spaces quite as much as Greg Lynn. The majority of problems faced when translating complex non-standard geometry from a computer into a physical object have already been surmounted by the automotive, aeronautical and marine industries. Nearly all the concept car workshops and most of the Hollywood special effects props industry are based on the West Coast. By using this dense network of experimental fabrication facilities in California, Lynn is able to create virtually anything. The ability to make any object he can conceive pushes Lynn to conceive ever more complex shapes, as his thought process is not limited by the conventional boundaries of what is possible.

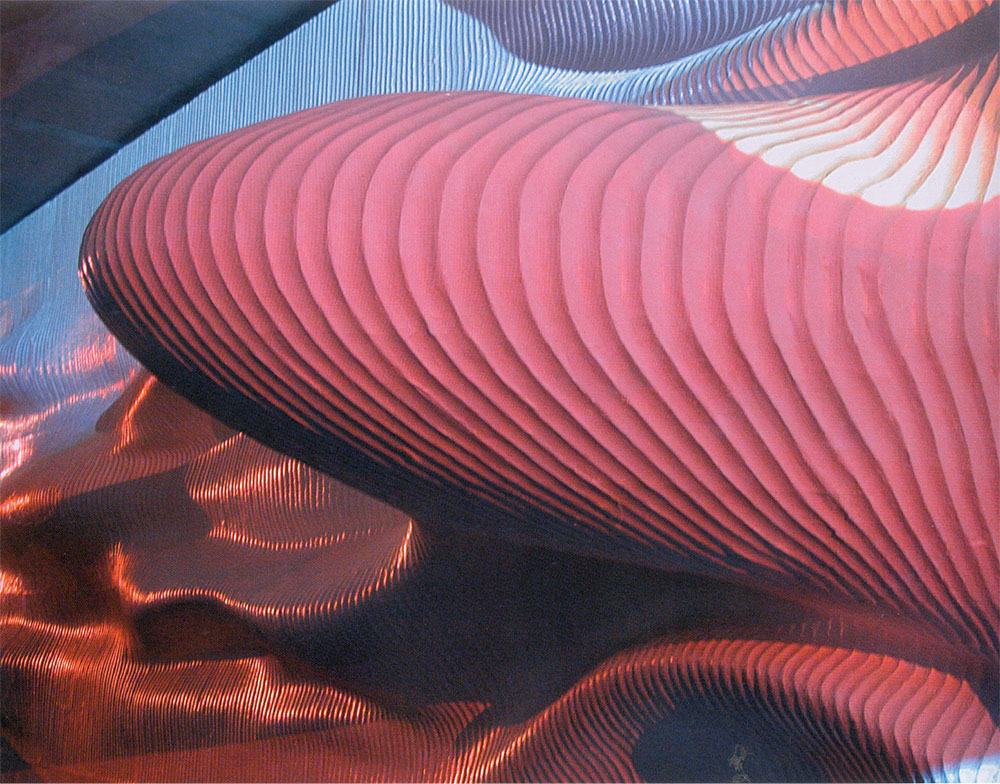

Chess Board: fabricated using the technology behind concept car headlamps.

With Lynn’s architecture taking a step into the world of machine fabrication, the final surface finish comes into question. Similarly, when using an animation technique to find a form, one must choose a moment at which to freeze the frame. The point along the manufacturing process at which one decides that the object is finished fascinates Lynn. The intellectual question of what defines decoration comes into play here. Is an articulated, artefacted surface more decorated than a smooth polished one if polishing is an additional step in the object’s production, or is decoration an additive process? Lynn explains the concept succinctly in the chapter introduction to “intricate pattern, relief and texture”. There he describes how the computer creates the tool path from the 3D data of the model, converting a complex surface made up of splines into a series of two-dimensional tool sweeps. These tool sweeps leave a pattern on the surface, both from their shape and from the cutting action. A tool will often pass over the same point several times, with the cutting path in a different plane, to remove extra material. As the material is removed, the moiré interference patterns become a feature of the object. The way the object is produced, and its resultant ‘birthmarks’, are as important as the shiny smooth object that we visualise in the computer.
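The regular ridges Lynn exploits are partly a geometric by-product of the tool path. Take a ball-nose cutter of radius R making parallel passes a distance s apart over a flat surface. The ridge (scallop) left between passes has height h = R − √(R² − (s/2)²). The Python sketch below computes that value and generates a simple zig-zag raster path over a height function. It is an illustrative simplification, with no tool-radius compensation and the flat-surface scallop formula. It is not the CAM software Lynn's fabricators used, and the function names are hypothetical.

```python
import math


def scallop_height(tool_radius, stepover):
    """Ridge height left between two parallel passes of a ball-nose cutter on a flat surface."""
    if stepover > 2 * tool_radius:
        raise ValueError("stepover is wider than the cutter; strips would be left uncut")
    return tool_radius - math.sqrt(tool_radius ** 2 - (stepover / 2) ** 2)


def raster_toolpath(surface, x_max, y_max, stepover, step):
    """Zig-zag path: passes along x spaced `stepover` apart in y, following z = surface(x, y).

    Simplification: the tool centre simply follows the surface (no radius compensation).
    """
    n_passes = int(y_max / stepover + 1e-9) + 1
    n_steps = int(x_max / step + 1e-9) + 1
    path = []
    for i in range(n_passes):
        y = i * stepover
        xs = [j * step for j in range(n_steps)]
        if i % 2:  # reverse alternate passes so the tool never has to lift and return
            xs.reverse()
        path.extend((x, y, surface(x, y)) for x in xs)
    return path
```

For example, a 3 mm radius ball-nose cutter stepping over by 2 mm leaves ridges about 0.17 mm high. Halving the stepover reduces them to about 0.04 mm. This is why the visible texture is, in effect, a design parameter.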

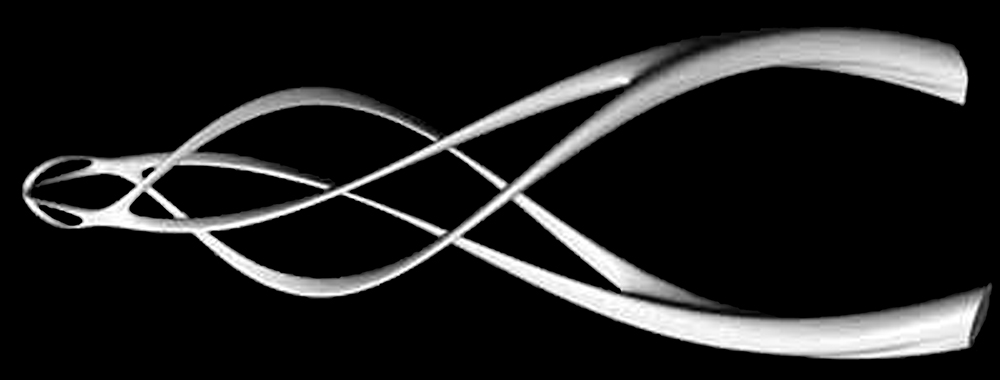

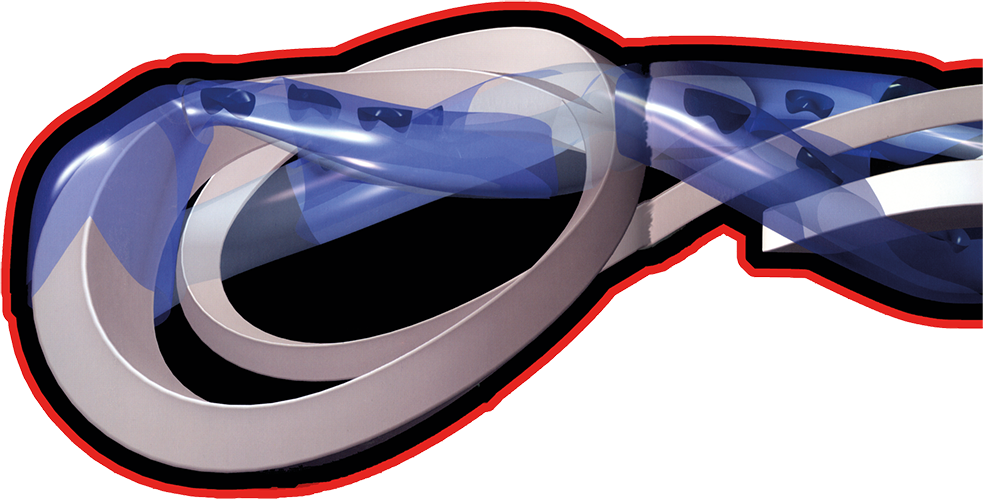

This unexpected, almost serendipitous result of the machine interface is carried through to other areas of Lynn’s design work. When a spline is offset far enough in a computer, loops begin to appear where the initial curvature is high. In most software these loops are removed by ‘loop cutting’ algorithms because they are conventionally viewed as a software glitch. Lynn believes that in order to use software to its fullest you must understand what it is actually doing; then you can decide whether it is working for you or against you. By switching this feature off, he has used the loop creation to design several buildings. These include the Eyebeam Atelier Museum of Art and Technology (a competition entry in New York) and his own home in Venice Beach, California. The looped splines are lofted and altered along a path, and the intersections produce the defining edges of spaces.
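The loops appear for a precise geometric reason. Offsetting a curve by a distance d towards its centre of curvature makes the offset fold back on itself wherever d exceeds the local radius of curvature 1/κ. The Python sketch below offsets an ellipse inwards and flags the samples where this happens. It deliberately does not trim the resulting loops, which is the step most software performs automatically. The ellipse and the function name are assumptions chosen for illustration.

```python
import math


def inward_offset(a, b, d, n=360):
    """Offset the ellipse (a cos t, b sin t) by distance d towards its centre.

    Returns (x, y, folded) samples, where folded is True when d is larger than the
    local radius of curvature: there the offset curve runs backwards between two
    cusps and forms the self-intersecting loops that 'loop cutting' would remove.
    """
    out = []
    for i in range(n):
        t = 2 * math.pi * i / n
        x, y = a * math.cos(t), b * math.sin(t)
        dx, dy = -a * math.sin(t), b * math.cos(t)      # first derivative
        ddx, ddy = -a * math.cos(t), -b * math.sin(t)   # second derivative
        speed = math.hypot(dx, dy)
        curvature = (dx * ddy - dy * ddx) / speed ** 3
        nx, ny = -dy / speed, dx / speed  # left normal: inward for an anticlockwise curve
        out.append((x + d * nx, y + d * ny, d * curvature > 1.0))
    return out
```

Take an ellipse with semi-axes 4 and 2. The radius of curvature at the ends of the long axis is b²/a = 1, so an inward offset of 1.5 folds there. It does not fold at the ends of the short axis, where the radius is a²/b = 8.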

Installation at Rendel & Spitz: The Gap. It experiments with surface finish as decoration.

Lynn also uses algorithms to his advantage. The Kleiburg housing block in Amsterdam (2002) is a refurbishment of an older housing project contained in a single building. After careful consideration of the brief, an algorithm was written that subdivided the interior into neighbourhoods accessed by escalators. The escalators were then supported by a ribbed system that also sheltered them. The form of these ribs was defined partly by a kit-of-parts approach. It was also defined partly by an algorithm that interrogated the two ribs preceding each rib and set its shape according to the tension and inertia of the shape. The algorithm was run from left to right, and then from right to left, to finally define the ribs. The result is a visually well-balanced façade whose design incorporates structural concerns and also fits the program perfectly.
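The description of the rib algorithm suggests a two-pass relaxation. The sketch below is a hypothetical reconstruction for illustration only, not FORM's actual code. Each rib is reduced to a single number (say, its height). ‘Inertia’ is the pull to continue the trend of the two preceding ribs, and the remaining weight acts as ‘tension’ back towards the rib's kit-of-parts target. The pass runs left to right and then right to left, as in the text.

```python
def sweep(targets, current, inertia, reverse=False):
    """One pass over the ribs (hypothetical rule, for illustration only).

    Each rib looks at the two ribs before it in the direction of travel. 'Inertia'
    pulls it towards continuing their trend; the remaining weight (1 - inertia)
    acts as 'tension' pulling it back to its own kit-of-parts target.
    """
    v = list(current)
    order = list(range(len(v)))
    if reverse:
        order.reverse()
    for a, b, c in zip(order, order[1:], order[2:]):
        trend = v[b] + (v[b] - v[a])  # where the two preceding ribs are heading
        v[c] = (1 - inertia) * targets[c] + inertia * trend
    return v


def shape_ribs(targets, inertia=0.5):
    """Run the rule left to right, then right to left."""
    forward = sweep(targets, targets, inertia)
    return sweep(targets, forward, inertia, reverse=True)
```

Steady or evenly graded targets pass through unchanged. A sudden step in the targets is spread over the neighbouring ribs, by an amount that depends on `inertia`.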

The two halves of this test board have the same shape in the computer, but it is interpreted differently by the CNC tool path program, producing wildly different results

Lynn’s approach of combining the power of software with the intricacies of real-world fabrication leads to some fascinating forms. The computer is essential to all of this, because its own idiosyncrasies as a medium are being explored rather than it being used to simulate a traditional process.

Lynn uses lofts of looped splines to define spaces

Conclusion

The desire that cities are currently showing to promote archi-tourism only highlights the level of media coverage that unconventional architecture attracts. However, the current climate surrounding digital design is notable not for what the media covers, but for what it ignores. Nobody is writing articles about new buildings drawn up using AutoCAD LT21 to produce neat drawings. The use of CAD for the production of drawings is now considered universal. As in so many industries, using traditional methods is now deemed either a rejection of modern methods for cultural or intellectual reasons, or a lack of professionalism in not having the correct tools for the job. In the not too distant future, three-dimensional design tools such as ArchiCAD22 or Architectural Desktop23 will be ubiquitous and therefore also deemed unworthy of media attention. One can only hope that eventually the cutting-edge tools and methods will lose their newness and be adopted by more conservative practices. The stadia designed by Arup Sport demonstrate the competitive advantage of producing finished designs in a few days using parametric design programs. That advantage will force the industry to pay attention and begin to adopt these methods.

There is a general fear of algorithms amongst architects, whose predominantly ‘artistic’ nature disposes them to distrust technical things. Yet when parametric design is compared with the technology of rendering, it becomes less daunting in the long term. Ten years ago rendering was highly mathematical and heavily programming-based, requiring an in-depth knowledge of the processes involved. These days an enormous level of automation is already built into the software, making rendering extremely simple. Parametric design is intrinsically more complicated than rendering, because individual rule sets need to be formulated. In rendering, by contrast, once the way in which light behaves has been codified, it is just a matter of refining it. But as software advances and more tools are embedded in the interface, parametric design will become more instinctive. Structure is easily reduced to maths, maths to programming, and programming to tools, and these tools allow designers to work with the real thing, in real time.

Combining digital and analogue computing offers fascinating possibilities for producing beautiful, elegant forms. Both specifically designed analogue computing devices (as used by NOX) and the study of biological entities (the Emergence and Design Group) give an insight into material performance that was lacking throughout most of the modern movement. When employing these methods, it is important to remember that in the production of biomimetic designs the cell or plant structure is essentially diagrammatic. The designer must abstract the structural rule set and apply it in a recontextualised way. We should not be building towers that look like termite mounds or daisies, but rather using the structural and organisational techniques intrinsic to them.

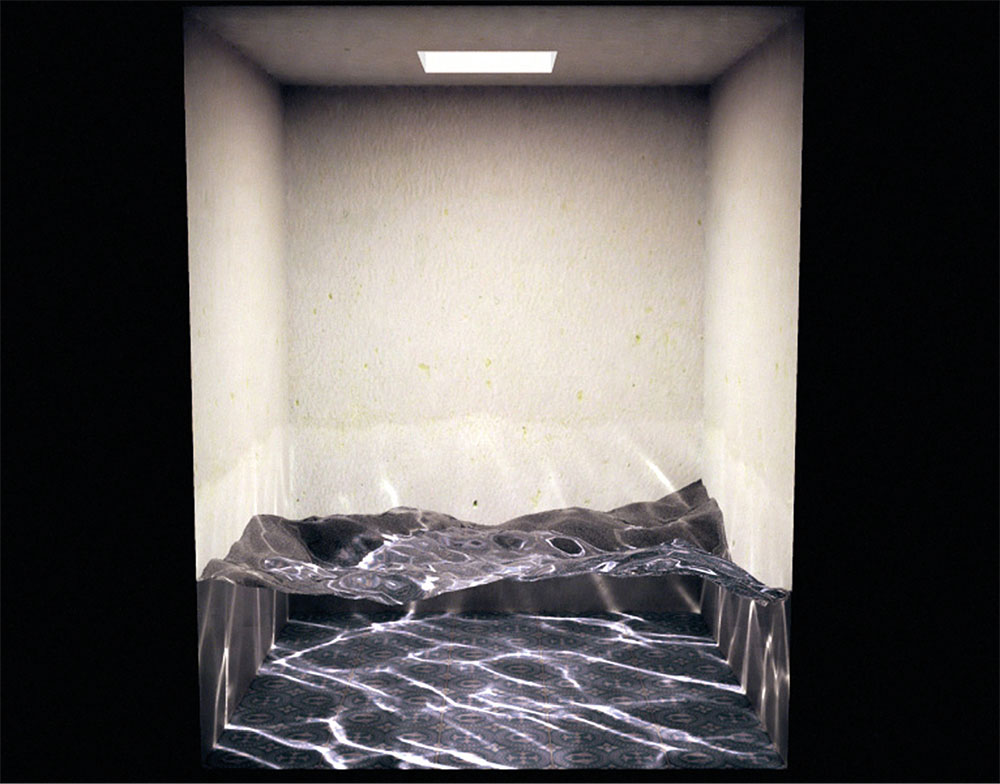

A rendered scene with animated water that refracts light. Ten years ago this would have been unthinkable.

Robert Aish of Bentley Systems draws a graph of the idealised design process: a straight line from inception to completion. He then overlays the realistic path taken by a design as its characteristics are modified, advanced and regressed, and this path is anything but linear. The further along this path the design is, the less likely it is that problems will be solved in the ideal way; a damage-control patch is the most likely outcome. For instance, suppose the design has a complicated curved space-frame incorporating the entrance, and the space-frame’s geometry is calculated manually. A minor change such as repositioning the entrance would then, in a conventional workflow, most likely be ignored. The entrance would be left where it is and the landscaping changed, producing a less than ideal design because of time and budgetary constraints. However, if the space-frame were designed using a parametric algorithm, moving the entrance would just require a subtle adjustment to the model and a click on ‘recalculate’, and minutes later the change would be made. With parametric design, fairly large changes can be made right up to the last minute before the tool paths are sent to the fabricators.

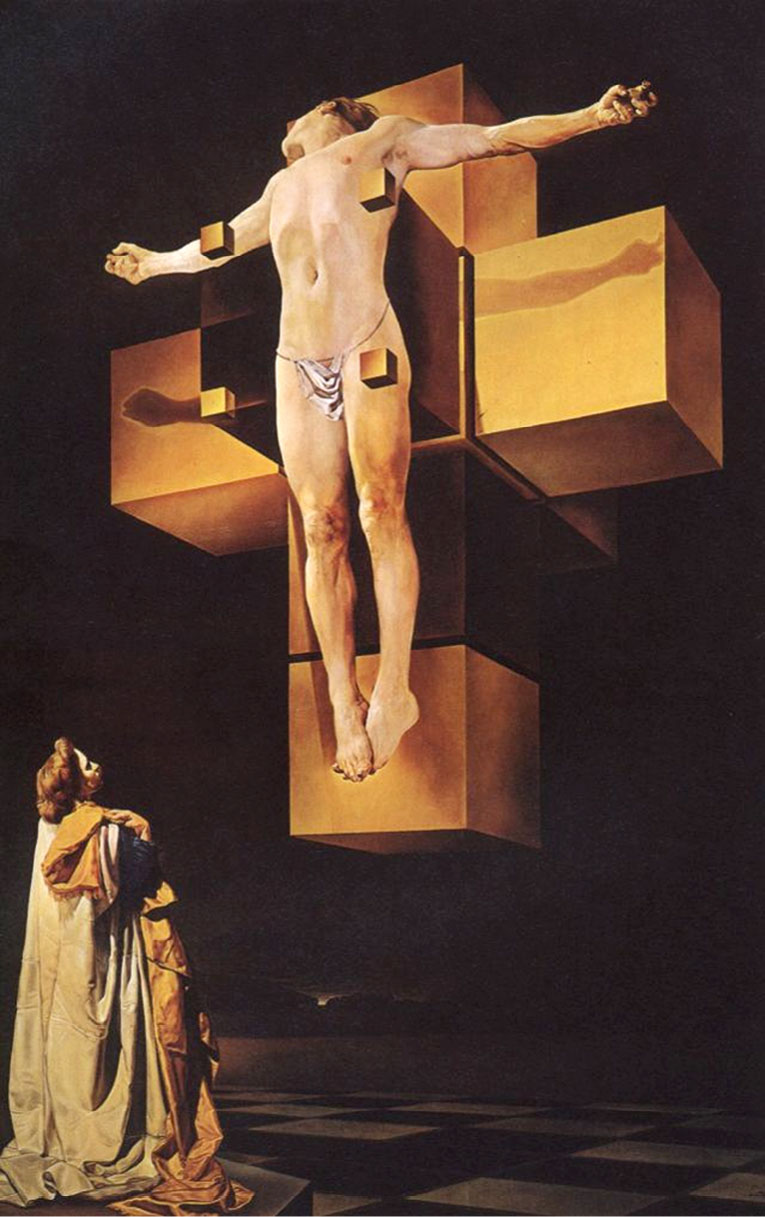


One of Gaudí’s hanging chain models

The notion that digital computers are making the inconceivable possible is somewhat inaccurate. The majority of complex forms created today have been possible (albeit in less advanced materials) for centuries. The Sagrada Família has an incredibly complex and advanced form, but it has been constructed on a timescale and budget inconceivable under modern financial constraints. Modern computational methods make constructions like this vastly easier. Greg Lynn’s definition of the difference between architecture and sculpture suggests that architecture is defined by its use of components to create a whole24. Under this definition the Colossus of Rhodes was the first biomimetic piece of architecture. It was built over twelve years, using hundreds of hand-beaten bronze panels to form a gigantic figure of the god Helios guarding the harbour. With modern digital design and fabrication methods, the labour-intensive process of forming the beaten panels could be completely automated. An algorithm would cut the whole figure up into components and then add the connection details. The panels could be identified with barcodes defining their precise location and orientation, enabling seamless onsite assembly. The forms could be output to a CNC machine to mill foam moulds for carbon/Kevlar epoxy panels. Using a modern digital workflow, the Colossus could be constructed in under a year.
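The panelisation step described above can be sketched for any surface given as a function of two parameters. The Python below assumes a hypothetical saddle-shaped surface and cuts it into a grid of quadrilateral panels. Each panel gets an ID encoding its row and column, the kind of label that could be printed as a barcode, plus a list of the neighbours it must be joined to. The ID format and function names are illustrative assumptions. Real panelisation would also need flattening or mould data, tolerances and connection geometry, all omitted here.

```python
def panelise(surface, rows, cols):
    """Cut surface(u, v) -> (x, y, z), with u, v in [0, 1], into rows x cols quad panels.

    Each panel is keyed by an ID encoding its row and column (hypothetical format)
    and records its corner points and the neighbouring panels it must be joined to.
    """
    def pid(r, c):
        return f"R{r:03d}C{c:03d}"

    panels = {}
    for r in range(rows):
        for c in range(cols):
            # Corners in order around the panel: (c, r), (c+1, r), (c+1, r+1), (c, r+1).
            corners = [surface(i / cols, j / rows)
                       for i, j in ((c, r), (c + 1, r), (c + 1, r + 1), (c, r + 1))]
            neighbours = [pid(rr, cc)
                          for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                          if 0 <= rr < rows and 0 <= cc < cols]
            panels[pid(r, c)] = {"corners": corners, "neighbours": neighbours}
    return panels


def saddle(u, v):
    """Hypothetical example surface: a 10 x 10 saddle."""
    return (10 * u, 10 * v, 4 * ((u - 0.5) ** 2 - (v - 0.5) ** 2))
```

Because the panel IDs and neighbour lists are derived from the same rule as the geometry, re-running the function after the surface changes regenerates both the parts and their assembly information together.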

As digital design becomes more widespread, the current method of architectural education may need to change too. This need not mean producing programmers or mathematicians at the expense of design skills. Nobody starts their design career aspiring to spend their time doing door schedules, and if computers can do the menial work, designers who can use them effectively will be freed to spend more time designing. Indeed, there is already a skills gap in digital design practitioners. Contrary to the conventional idea of computer virtuosos as young upstarts, the main protagonists in the digital design field are all over 40 (Novak – 48, Lynn – 41, Gehry – 76). They were all involved in computing relatively close to its inception, when programming and understanding the workings of the computer were essential to operating the machine. This ‘insider’ knowledge has allowed them to make the computer do what they want, rather than being constrained by the software developer’s built-in settings. Since the introduction of Microsoft Windows and other GUI operating systems, the level of abstraction and digital handholding has increased at the expense of an awareness of the underlying principles. As the total number of computer users has increased, the number of people skilled enough to push software to its limits seems to be proportionately decreasing. This has no detrimental effect on the majority of computer users, but for innovation to occur there needs to be a certain level of understanding. Architectural education is beginning to address this, and digital design is likely to form an increasingly central part of the architecture syllabus. Practitioners such as Karl Chu, Greg Lynn and Lars Spuybroek are being invited to teach and continue their research at universities. As a result, the education that some students receive is at the cutting edge, preparing them to take over as the next generation of innovators.


Dalí: Colossus

The concept of architect as tool builder is one who designs the rules that the system adheres to and then lets the system (structure) grow and evolve into a finished form. This is difficult to grasp for a generation weaned on the slightly megalomaniac paradigm of architect as deity, controlling all things and generally compromising complexity to achieve a human level of involvement. Many fear that relinquishing control to the apparently serendipitous results of the computer will diminish the quality of the resultant design. One main concern is that as people publish new tools, there will be an ensuing wave of buildings that have clearly used a given tool in their design, just as student renderings often use identifiably standard texturing. This concern is justifiable, but the problem needs to be eradicated through a combination of education in ‘tool building’ and general pressure from the design community as a whole to retain originality and reject cookie-cutter projects. The worry that designs will lose their human touch and fall prey to ruthless computerised efficiency is, however, unfounded. The simple answer is that if the resultant solution is unsatisfactory, it can be ignored and the process started again. Alternatively, one can return along the path taken to reach the present position, change a variable and re-run, exactly as if it were a manually conceived design. For architecture to keep evolving, there needs to be a continual shedding of approaches. Marcos Novak makes the case succinctly when he asserts that the Frank Gehry approach of manual modelling with subsequent computer construction was a fitting end to the twentieth century, but has no place in the twenty-first25.

The one thing that makes digital design stand out from what preceded it is its ability to deal with complexity. Everything that can be done with a computer is also possible without it: a computer is just a box with wires that does maths, and as such can be replicated, given sufficient time, with a pen and paper. Time is the crucial factor, however. As Gaudí proved with the Sagrada Família, there is not enough of it in a human lifetime to do the calculations (he died with less than half the church completed). The concept of finite element analysis was invented before computers, but was never pursued because it was too complex to carry out any meaningful analysis manually. Calculating the algorithms that govern an emergent system, or the polynomial and simultaneous equations that govern a surface in a parametric design, relies on the power of computers to do the calculations in a split second. This acceleration of complexity allows new forms and new organisational and structural systems to become a reality.
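Finite element analysis makes the point well. Even the simplest case requires assembling and solving a system of simultaneous equations whose size grows with the number of elements. The Python sketch below analyses an axial bar fixed at one end with a load at the other, split into n two-node elements. Because the exact tip displacement is FL/(EA), the result can be checked by hand. A practical model would use thousands of elements and a library solver; this one stays in plain Python for transparency, and the function names are illustrative.

```python
def solve(K, F):
    """Gaussian elimination without pivoting (adequate here: K is symmetric positive definite)."""
    m = len(F)
    K = [row[:] for row in K]
    F = F[:]
    for p in range(m):  # forward elimination
        for r in range(p + 1, m):
            f = K[r][p] / K[p][p]
            for c in range(p, m):
                K[r][c] -= f * K[p][c]
            F[r] -= f * F[p]
    u = [0.0] * m
    for r in reversed(range(m)):  # back substitution
        u[r] = (F[r] - sum(K[r][c] * u[c] for c in range(r + 1, m))) / K[r][r]
    return u


def bar_displacements(n_elements, length, E, A, tip_load):
    """Nodal displacements of a bar fixed at x = 0 with an axial load at the free end."""
    k = E * A / (length / n_elements)  # axial stiffness of one element
    n = n_elements + 1                 # number of nodes
    K = [[0.0] * n for _ in range(n)]
    for e in range(n_elements):        # assemble element matrices [[k, -k], [-k, k]]
        i, j = e, e + 1
        K[i][i] += k
        K[j][j] += k
        K[i][j] -= k
        K[j][i] -= k
    F = [0.0] * n
    F[-1] = tip_load
    # Fixed support at node 0: delete its row and column, then solve K u = F.
    u = solve([row[1:] for row in K[1:]], F[1:])
    return [0.0] + u
```

For a 2 m steel bar (E = 200 GPa, A = 1 cm²) under 10 kN, four elements give a tip displacement of 1 mm, matching FL/(EA).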

One area of design that has been spawned entirely by computers is designing architecture in cyberspace. If our integration with the internet and mobile communications continues at its current rate, then it is conceivable that we will soon begin to ‘inhabit’ the internet rather than merely viewing it as we do now. Just as every generation of architects struggles with the question “…but is it architecture?”, ours has the digital frontier to cross. The associated removal of restrictions will doubtless produce some fascinating work, as well as some interesting questions about how we conceive and inhabit our comparatively restrictive physical world.


Alien Bio

Glossary

Rapid prototyping

Rapid prototyping is a general term applied to a range of manufacturing techniques used to produce a physical prototype from a computer model. It is used instead of more traditional manufacturing techniques because there is no need to make jigs or work out complicated tool paths. All the methods slice the model into very thin sections and then rebuild it one section at a time, so the layers are essentially stacked on top of each other.
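The slicing step can be sketched for the simplest possible model, a solid sphere. Each horizontal layer at height z cuts a circle of radius √(r² − z²). Real slicing software works on triangle meshes rather than on a formula, so this is only an illustration. The function name `slice_sphere` and the dimensions are hypothetical.

```python
import math

def slice_sphere(radius, layer_thickness):
    """Return (height, section_radius) for each layer of a solid sphere.

    The sphere is centred on the origin. Each layer is sampled at the middle
    of its slab, working from the bottom (z = -radius) upwards.
    """
    layers = []
    n_layers = math.ceil(2 * radius / layer_thickness)
    for i in range(n_layers):
        z = -radius + (i + 0.5) * layer_thickness  # mid-height of slab i
        if abs(z) >= radius:
            layers.append((z, 0.0))  # slab lies outside the sphere
        else:
            layers.append((z, math.sqrt(radius ** 2 - z ** 2)))
    return layers

sections = slice_sphere(radius=10.0, layer_thickness=0.5)
print(len(sections))  # 40 layers for a 20 mm tall part at 0.5 mm per layer
```

A thinner layer gives a smoother surface but more layers to build, which is the basic trade-off in every method below.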

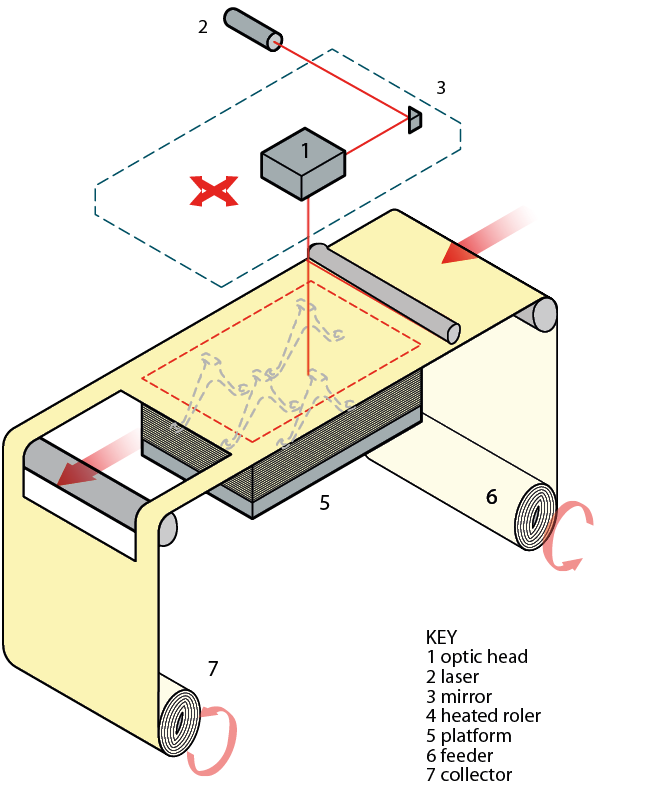

Selective Laser Sintering (SLS)

A very thin layer of fine polymer or metal particles is fused together by a laser beam in the pattern defined by a section through the computer model. The platform the particles are resting on is then lowered by one layer thickness, a new layer of particles is deposited and levelled off with a roller, and the process is repeated. When the particles melt they become connected to their neighbouring particles on the same layer and to those below them. SLS can be used to produce fully dense working prototypes.

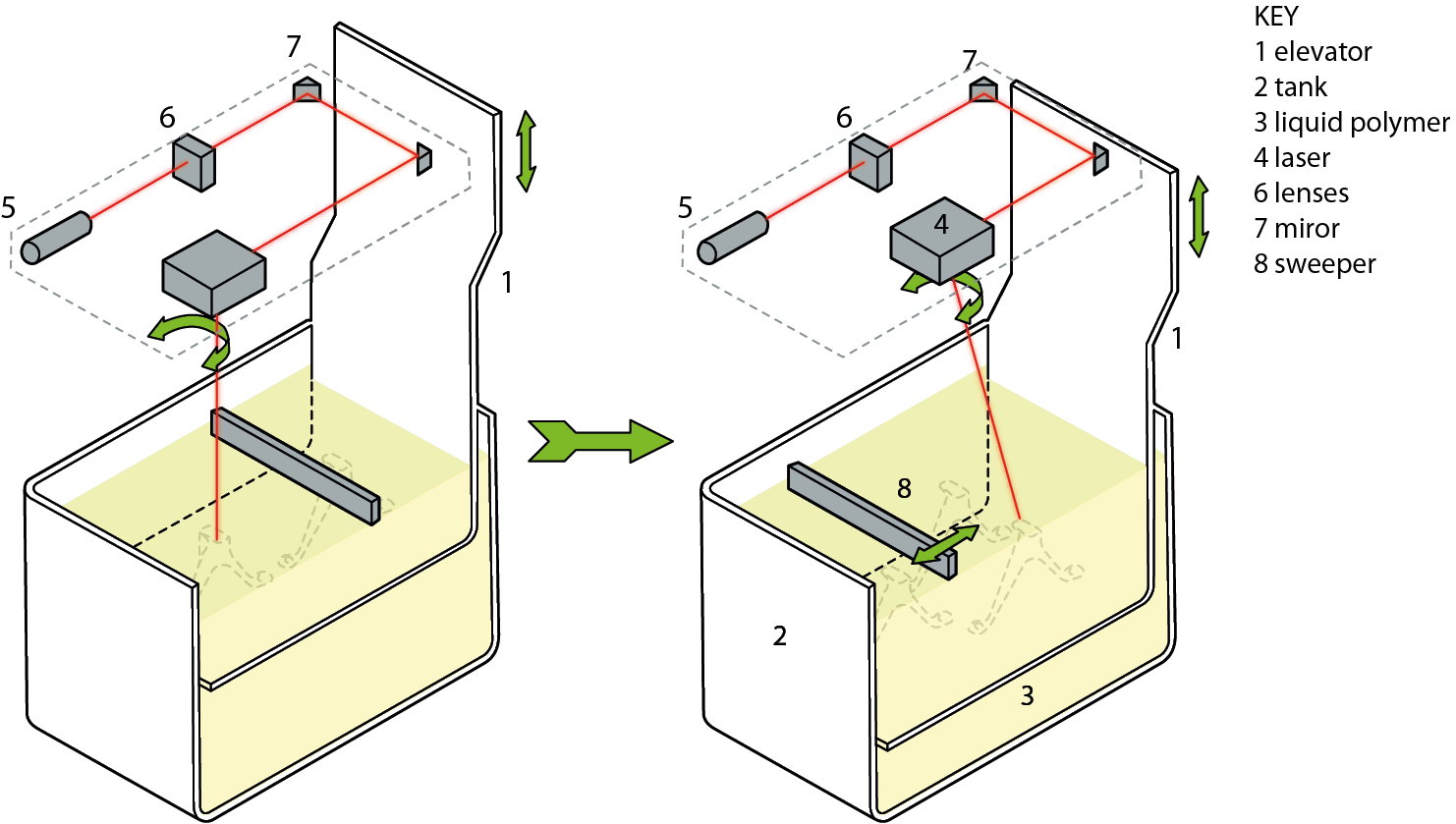

Three Dimensional Physical Printing (3DPP)

An inkjet print head sprays a binder onto a powder layer, arranged in the same way as in SLS, gluing the particles together layer by layer. The print head can hold several ink types, and these can be configured to produce sections of the model that are soluble, thereby producing sacrificial supports etc.

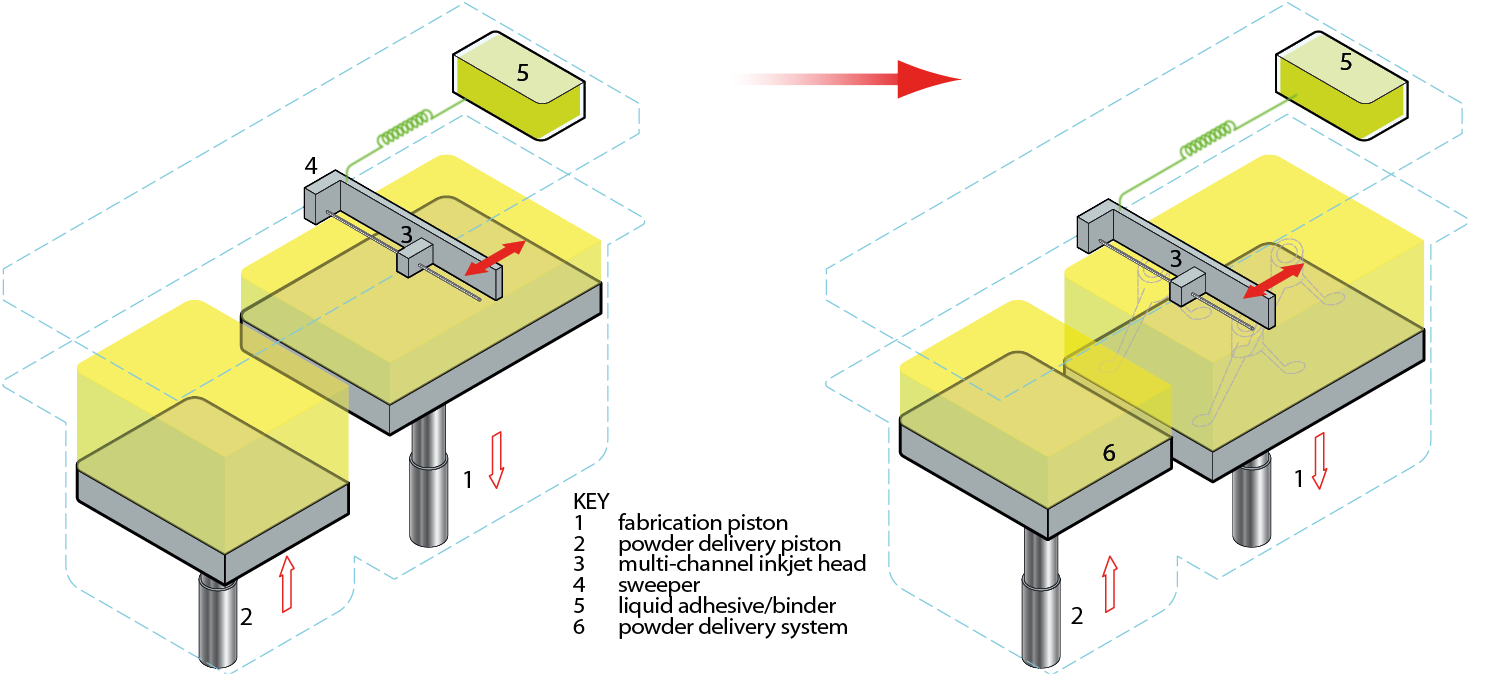

Laminated Object Modelling (LOM)

Layers of self-adhesive paper are rolled over the bed of the machine and the outline is traced with a laser cutter. The remaining paper is then cut into a cross-hatched pattern so that it can be broken off once the model is complete. This technique is relatively fast, but it is limited in its ability to produce models with enclosed interiors, as the waste paper would be trapped inside. With a powder-based method, by contrast, one could make a cage with a loose ball inside. The powder acts as a support and holds the ball in suspension until completion, when the model can be removed and shaken to clear the trapped dust.

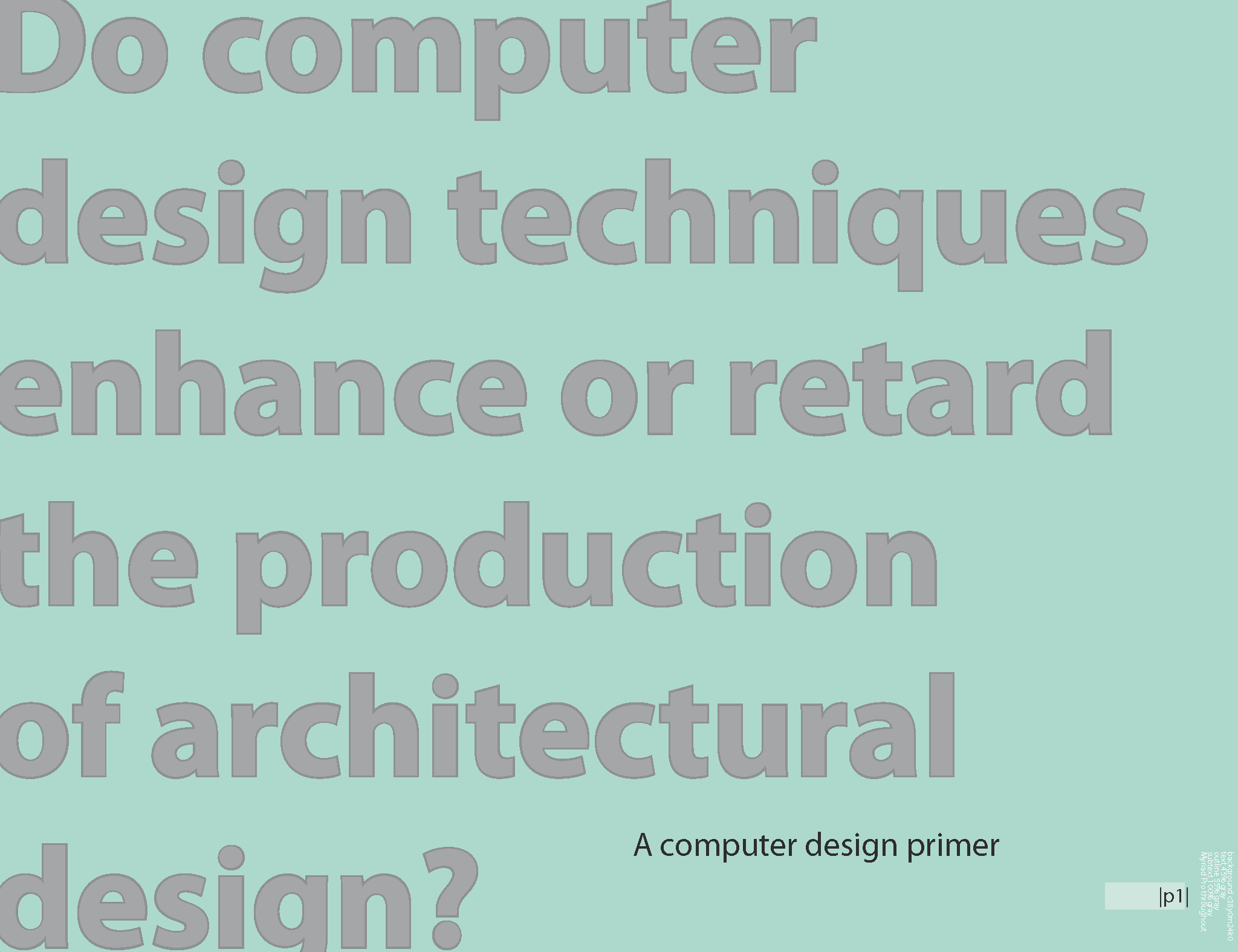

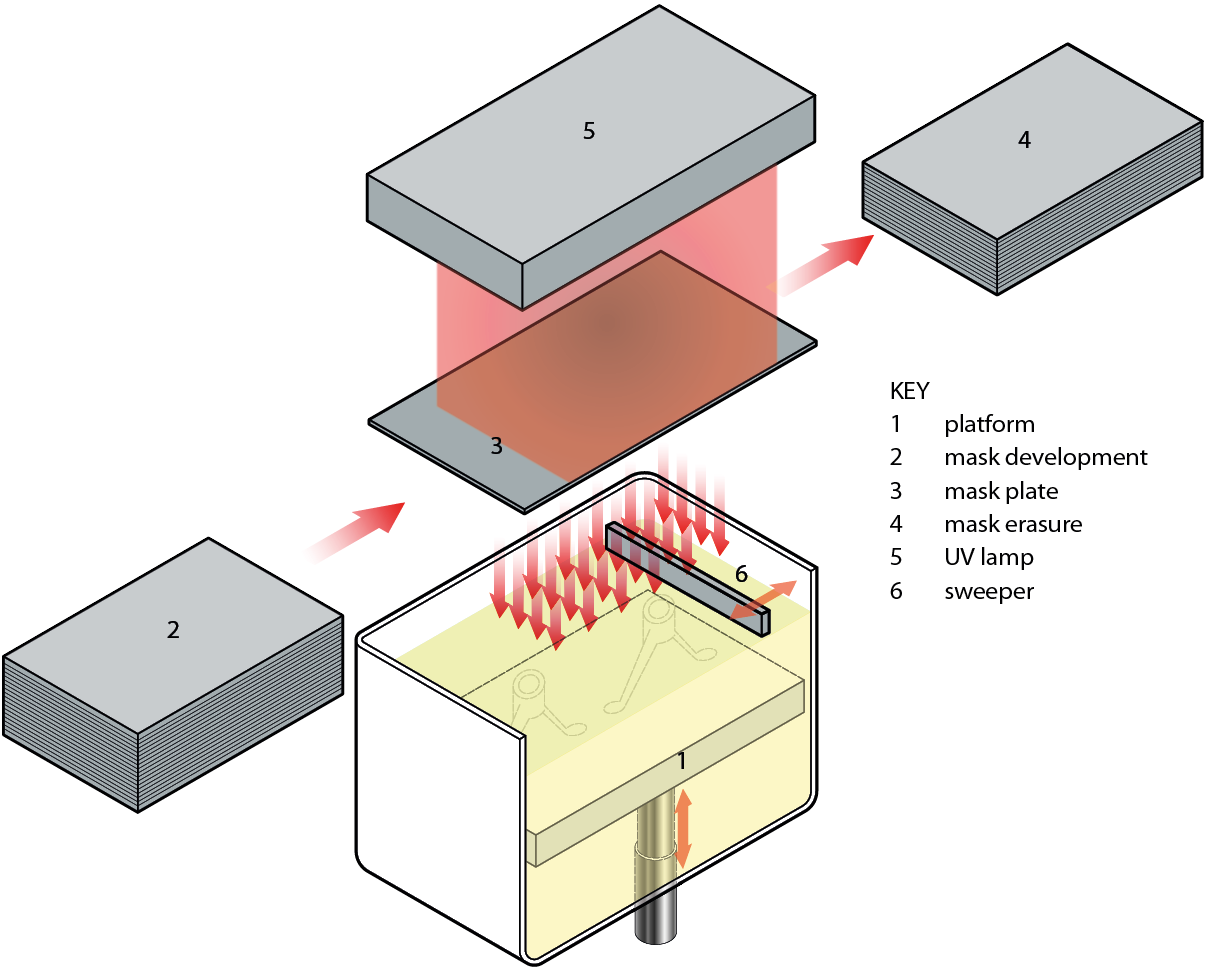

Stereolithography including Photomasking

A liquid polymer resin that is cured by exposure to light is contained in a tank similar to the ones used in powder-based methods. A laser traces the shape required, the platen is lowered by one layer, and the process is repeated. The resin can produce objects in various colours, including a clear resin26. Photomasking uses the same system, but instead of the laser there is a large light covering the whole tank. A screen similar to the ones found in digital projectors blocks the light where necessary.

Fused deposition modelling (FDM)

This method is similar to coil pottery or making things with a glue gun. The deposition head extrudes a thin bead of molten thermoplastic in the outline of the shape, and also in a cross-hatch pattern in the interior for support. The platen is then lowered and the process continues. As there is no powder or liquid to support the form as it is grown, a secondary head deposits a water-soluble material that acts as a temporary support.
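The cross-hatch infill can be sketched for a single rectangular layer. This assumes the common approach of alternating the raster direction from layer to layer, so that stacked layers cross each other. Each line is also reversed in a zig-zag so the head never travels back empty. The function `crosshatch_infill` is hypothetical; real slicers also handle arbitrary outlines, perimeters and variable infill density.

```python
def crosshatch_infill(width, height, spacing, layer_index):
    """Raster infill lines for one layer of a width x height rectangle.

    Even layers run parallel to the x axis and odd layers parallel to the y axis,
    so stacked layers form a cross-hatch. Every other line is reversed (zig-zag)
    so the head does not travel back to the same side before each line.
    Returns a list of ((x0, y0), (x1, y1)) segments in printing order.
    """
    horizontal = layer_index % 2 == 0
    extent = height if horizontal else width  # distance the lines are spread across
    segments = []
    k = 0
    offset = spacing / 2
    while offset < extent:
        if horizontal:
            start, end = (0.0, offset), (width, offset)
        else:
            start, end = (offset, 0.0), (offset, height)
        if k % 2 == 1:
            start, end = end, start  # zig-zag: reverse every other line
        segments.append((start, end))
        k += 1
        offset = spacing / 2 + k * spacing
    return segments

for layer in range(2):
    print(layer, crosshatch_infill(10.0, 4.0, 1.0, layer)[:2])
```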

Computer model

A computer model can take various forms. It can be purely mathematical, for example the prediction of stock market trends or of cod populations. In architectural terms, however, the computer model is generally a representation in three-dimensional virtual space of the intended form of the proposed structure. Various physics calculations and geometrical transformations can then be applied to it in order to achieve a structurally optimised or formally interesting design. The computer model can also dramatically reduce design lead times and construction wastage. It can produce precise bills of quantities and, for instance, do all the steelwork detailing or automatically analyse egress routes for fire regulations. As the computer model is software based, it can be passed between different specialists in its ‘soft’ form, and they can modify the design without time-consuming redrafting. The building can be essentially perfected before it is committed to production.

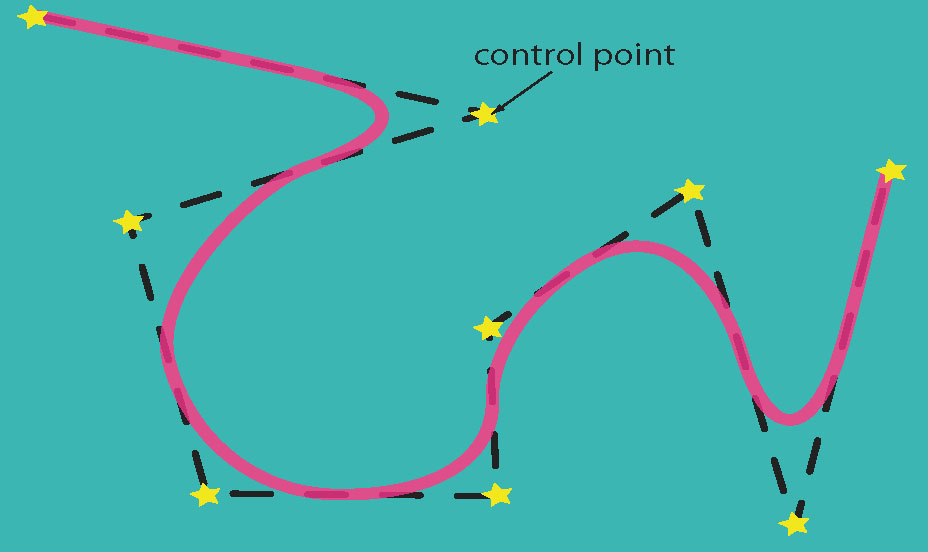

Spline

Splines are the most common method of defining curves in computer graphics. A spline was originally a thin, flexible piece of wood with weights attached to control its curve, used in shipbuilding to define hull shapes. The weights have been replaced with control points, which do the same thing in a computerised environment.
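A Bézier curve is one common kind of control-point curve and shows the idea compactly. CAD packages often use B-splines or NURBS, which build on the same principle, so treat this as an illustration rather than what any particular program does. The sketch below evaluates a cubic Bézier curve with de Casteljau's algorithm, which repeatedly interpolates between neighbouring control points. The curve passes through the end points. The inner points pull the curve towards them without lying on it, much as the weights did.

```python
def lerp(p, q, t):
    """Linear interpolation between points p and q (tuples of equal length)."""
    return tuple(a + (b - a) * t for a, b in zip(p, q))

def bezier_point(control_points, t):
    """Evaluate a Bézier curve at parameter t in [0, 1] (de Casteljau's algorithm)."""
    points = list(control_points)
    while len(points) > 1:
        # each pass replaces n points with the n - 1 points between neighbours
        points = [lerp(points[i], points[i + 1], t) for i in range(len(points) - 1)]
    return points[0]

controls = [(0.0, 0.0), (1.0, 2.0), (3.0, 2.0), (4.0, 0.0)]
curve = [bezier_point(controls, i / 10) for i in range(11)]
print(bezier_point(controls, 0.5))  # (2.0, 1.5): the curve never reaches y = 2
```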

Topology

Put simply, topology is a branch of geometry that isn’t concerned with scale or direction, but with how things are connected together and whether they have holes in them. A torus is topologically different from a ball because of its hole. However much the torus is stretched, squashed or shrunk, the hole cannot be removed without cutting or gluing, whereas a ball has no hole to preserve. The most commonly cited topological transformation is from a donut to a teacup. As the donut already has a hole in it, it is simply a case of moving the matter around the hole to change the form. One side of the hole gets very thin and becomes the handle, and the other side becomes much larger, gets a dent in the top and becomes the bowl. The donut and the cup are topologically identical27.
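A standard way to measure "how many holes" a closed surface has, independent of its size or shape, is the Euler characteristic V − E + F (vertices minus edges plus faces of any mesh covering the surface). It is 2 for anything that can be deformed into a sphere and 0 for a torus. The donut and the teacup therefore share the torus value. The sketch below counts these for a cube and for a torus built from a grid of quads whose opposite sides are glued together. The mesh-building helpers are illustrative.

```python
def euler_from_faces(faces):
    """V - E + F for a closed mesh given as polygons (tuples of vertex indices)."""
    vertices = {v for face in faces for v in face}
    edges = set()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):  # consecutive pairs, wrapping round
            edges.add(frozenset((a, b)))
    return len(vertices) - len(edges) + len(faces)

# cube: vertices 0..7, its surface can be inflated into a sphere (no holes)
CUBE_FACES = [(0, 1, 3, 2), (4, 5, 7, 6), (0, 1, 5, 4),
              (2, 3, 7, 6), (0, 2, 6, 4), (1, 3, 7, 5)]

def torus_faces(n, m):
    """Quads of an n x m grid whose opposite sides are joined (needs n, m >= 3)."""
    faces = []
    for i in range(n):
        for j in range(m):
            a = i * m + j
            b = ((i + 1) % n) * m + j
            c = ((i + 1) % n) * m + (j + 1) % m
            d = i * m + (j + 1) % m
            faces.append((a, b, c, d))
    return faces

print(euler_from_faces(CUBE_FACES))          # 2
print(euler_from_faces(torus_faces(12, 8)))  # 0, whatever grid size is used
```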

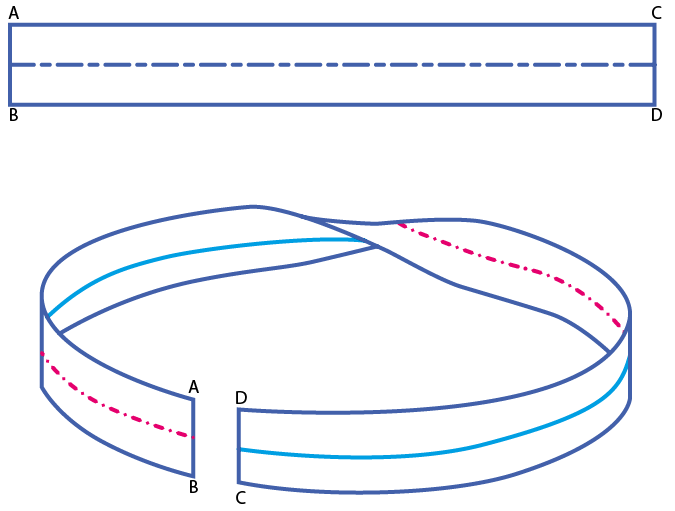

Möbius strip

The Möbius strip is a mathematical curiosity in that it is a topological surface with only one side. It was discovered in the nineteenth century by August Ferdinand Möbius. It is often explained using a paper strip which has been given a half twist and stuck end to end in a loop. This description can lead to confusion when trying to conceptualise it later on, because the paper strip is a thin three-dimensional object. It has thickness and is essentially a flattened solid torus, whereas the Möbius strip is actually a two-dimensional surface that exists in three-dimensional space.
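One-sidedness can be checked numerically. This sketch assumes the standard parametrisation x = (1 + (v/2)cos(u/2))cos u, y = (1 + (v/2)cos(u/2))sin u, z = (v/2)sin(u/2), with u going round the loop and v going across the strip. It follows the surface normal (the direction pointing "out of" one side) once round the centre line. It arrives back at the starting point facing the opposite way, so there is no consistent second side. The step size `h` is an arbitrary choice for the finite differences.

```python
import math

def mobius_point(u, v):
    """Point on a Möbius strip of centre radius 1 and width 1.
    u in [0, 2*pi) goes round the loop, v in [-1, 1] goes across the strip."""
    r = 1 + (v / 2) * math.cos(u / 2)
    return (r * math.cos(u), r * math.sin(u), (v / 2) * math.sin(u / 2))

def unit_normal(u, v, h=1e-6):
    """Unit surface normal from central-difference partial derivatives."""
    pu = [(a - b) / (2 * h) for a, b in zip(mobius_point(u + h, v), mobius_point(u - h, v))]
    pv = [(a - b) / (2 * h) for a, b in zip(mobius_point(u, v + h), mobius_point(u, v - h))]
    n = (pu[1] * pv[2] - pu[2] * pv[1],  # cross product pu x pv
         pu[2] * pv[0] - pu[0] * pv[2],
         pu[0] * pv[1] - pu[1] * pv[0])
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)

start = unit_normal(0.0, 0.0)
end = unit_normal(2 * math.pi, 0.0)  # same point, after one trip round the loop
dot = sum(a * b for a, b in zip(start, end))
print(round(dot, 6))  # -1.0: the normal has flipped, so there is only one side
```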

Klein Bottle

In 1882 Felix Klein devised what is essentially a Möbius strip with an extra dimension, a Möbius surface, and this eventually became known as the Klein Bottle. The paper approximation of the Möbius strip provides great surprise and delight to those who encounter it. The Klein bottle, by contrast, is quite disappointing, because the point where the surface intersects itself makes the three-dimensional form seem not to work. However, just as the Möbius strip is a two-dimensional surface that needs three-dimensional space to exist, the Klein Bottle is a two-dimensional surface that needs four-dimensional space to work. The Möbius strip does its flip in the third dimension, which gives it its special properties, and the Klein Bottle avoids its self-intersection in the fourth dimension37.

Virtual space

The way in which we conceptualise space is based on the relationships between the objects that surround us. When looking at a photograph or a painting we construct virtual space in our heads because we feel immersed in the experience the medium provides, even though it is really just a planar image. However, the term is generally used to describe a geometrically constructed ‘space’ created inside a computer. The advantage of computerised virtual space is that it has no limits. It can be made as multi-dimensional as required, and scale isn’t a barrier to conception. A virtual space can also follow any geometrical rules desired. Alice encountered unconventional space as she stepped through the looking glass, and in the same way virtual space can allow us to experience, and then design in and inhabit, another realm.

Non-Euclidean geometry

Euclid of Alexandria (Greek: Eukleides) (circa 365–275 BC)38 wrote a book called ‘Elements’ in which he lays out his famous postulates. All geometry was based on these until the first half of the nineteenth century, when the fifth (parallel) postulate was finally successfully challenged. Two-dimensional Euclidean geometry is geometry on a flat plane, where all the postulates hold. On a curved surface, such as a sphere or a hyperbolic (saddle-shaped) surface, the parallel postulate fails. The most commonly used example is that of parallel lines. On a flat plane two parallel lines never meet. On a sphere, however, two lines of longitude that both cross the equator at right angles, and are therefore parallel there, meet at the poles. On the curved surface these lines are still straight: they are great circles, the sphere’s equivalent of straight lines.39
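A related consequence is that the angles of a triangle on a sphere add up to more than 180°. The sketch below takes the triangle formed by two of those lines of longitude and the equator. Both lines meet the equator at right angles, yet they still meet at the pole. It measures the angle at each corner between the great-circle sides. A very small triangle comes out close to the flat-plane value. The helper names are illustrative.

```python
import math

def normalise(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def vertex_angle(a, b, c):
    """Angle in degrees at vertex a of the spherical triangle abc (unit vectors).
    Each side leaving a heads in the direction of the other vertex's component
    perpendicular to a (the tangent to the great circle at a)."""
    tb = normalise(tuple(bi - dot(a, b) * ai for ai, bi in zip(a, b)))
    tc = normalise(tuple(ci - dot(a, c) * ai for ai, ci in zip(a, c)))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot(tb, tc)))))

def angle_sum(a, b, c):
    return vertex_angle(a, b, c) + vertex_angle(b, c, a) + vertex_angle(c, a, b)

north_pole = (0.0, 0.0, 1.0)
on_equator_1 = (1.0, 0.0, 0.0)  # longitude 0
on_equator_2 = (0.0, 1.0, 0.0)  # longitude 90 degrees east
print(angle_sum(north_pole, on_equator_1, on_equator_2))  # 270 degrees (up to rounding)

tiny = [normalise(p) for p in ((0.0, 0.0, 1.0), (0.001, 0.0, 1.0), (0.0, 0.001, 1.0))]
print(angle_sum(*tiny))  # very close to 180: small patches look flat
```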

Digital

Digitally encoded information is a stream of 1s and 0s (i.e. base 2), and various combinations of these mean different things. Virtually all electronic computers use binary systems. The disadvantage of digital information is that it is made up of discrete chunks, so it measures in steps and has to interpolate the gaps.
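The "steps" can be made concrete with quantisation, the process of storing an analogue value using a fixed number of bits. This sketch assumes values scaled to the range 0 to 1 and rounds each one to the nearest of 2^bits levels. It returns the binary code that would be stored alongside the value it represents. Any detail between two levels is lost. The function `quantise` is illustrative.

```python
def quantise(x, bits):
    """Map an analogue value x in [0, 1] to the nearest of 2**bits discrete levels.
    Returns (binary code as stored, value that code represents)."""
    x = min(max(x, 0.0), 1.0)           # clamp to the representable range
    levels = 2 ** bits
    index = round(x * (levels - 1))     # nearest step
    return format(index, f"0{bits}b"), index / (levels - 1)

for sample in (0.0, 0.3, 0.62, 1.0):
    print(sample, quantise(sample, bits=3))
```

With 3 bits there are only 8 levels, so 0.3 is stored as `010` and read back as 2/7 ≈ 0.286. Adding bits makes the steps finer but never removes them.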

Analogue

A system that has no graduations, and can therefore give an infinitely variable response. Data that is recorded in an analogue form is generally stored as a waveform; for example, a record player reads analogue information off the record’s surface. A bent piece of paper is an analogue system because the paper can achieve an infinite number of forms with no steps in between.

Computing

Computing is a mathematical or logical calculation; a task is computable if one can specify a sequence of instructions, a rule set, which when followed will result in the completion of the task. This set of instructions is called an effective procedure, or algorithm, and is what is fed into a computer as a program.
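A classic example of an effective procedure comes from Euclid’s ‘Elements’: the method for finding the greatest common divisor of two whole numbers. The rule set is short, every step is mechanical, and it is guaranteed to finish. That is exactly what makes a task computable in the sense above. This is a minimal Python rendering.

```python
def gcd(a, b):
    """Greatest common divisor of two non-negative integers by Euclid's algorithm."""
    while b != 0:
        a, b = b, a % b  # replace the pair by (smaller number, remainder)
    return a

print(gcd(1071, 462))  # 21
```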

Computer

Generally a computer is conceived as a machine which takes an algorithm and applies it to a set of data. Today almost all computers are electronic and digital, but Charles Babbage’s mechanical difference engine is one of the first examples of a mathematical computer40. In an analogue computer, however, the algorithm is intrinsic to the materiality of the object. The calculation is carried out by the interaction of forces with the device itself to give results. Analogue computers are generally used to calculate complicated catenary curves and three-dimensional surfaces.
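Babbage’s difference engine tabulated polynomials using the method of finite differences. Once the columns are set up with a starting value and its successive differences, every further entry in the table needs nothing but repeated addition. The sketch below imitates that principle in Python. The function names are illustrative and say nothing about how the machine was physically built.

```python
def initial_differences(values):
    """Leading value and successive finite differences of a table:
    the starting settings the engine's columns would be given."""
    columns = []
    row = list(values)
    while row:
        columns.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return columns

def tabulate(columns, count):
    """Produce `count` table entries using addition only."""
    columns = list(columns)
    table = []
    for _ in range(count):
        table.append(columns[0])
        # add each column's right-hand neighbour into it; working left to right
        # means every addition uses the neighbour's value from the previous step
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table

# f(n) = n**2 + n + 41 is quadratic, so degree + 1 = 3 starting values are enough
start = initial_differences([41, 43, 47])
print(start)               # [41, 2, 2]
print(tabulate(start, 6))  # [41, 43, 47, 53, 61, 71]
```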

Iteration

A process of repeated refinement to discover a result, similar to filing a peg to fit in a hole, but done mathematically. An equation roughly calculates a figure, then recalculates it using the error or variation to hone the result, so that it converges towards the exact answer.
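A familiar example is finding a square root by Heron’s method, which is Newton’s method applied to x² = n. Start with a rough guess, then replace it with the average of the guess and n divided by the guess. Each pass shrinks the error, and the loop stops once the result is close enough. The tolerance and starting guess here are arbitrary illustrative choices.

```python
def heron_sqrt(n, tolerance=1e-12):
    """Square root of n by iteration (Heron's / Newton's method).
    Returns (estimate, number of refinement steps taken)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return 0.0, 0
    guess = n if n >= 1 else 1.0  # rough starting figure
    steps = 0
    while abs(guess * guess - n) > tolerance * n:
        guess = (guess + n / guess) / 2  # average the guess with n / guess
        steps += 1
    return guess, steps

root, steps = heron_sqrt(2.0)
print(root, steps)  # a handful of steps reaches full double precision
```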

Blob

An amorphous three-dimensional shape generally associated with early digital architecture; mathematically, blobs are often defined as isosurfaces.
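One common way to make blobs is with "metaballs". Each ball radiates a field that weakens with distance, the fields are added together, and the blob’s surface is the isosurface where the total equals a chosen threshold. When two balls come close, their fields add in the gap and the shapes fuse into one smooth form. The r²/d² falloff and threshold of 1 below are illustrative choices; modelling packages use a variety of falloff functions.

```python
import math

def field(point, balls):
    """Summed influence r**2 / d**2 of each (centre, radius) ball at a point."""
    total = 0.0
    for centre, radius in balls:
        d2 = sum((p - c) ** 2 for p, c in zip(point, centre))
        if d2 == 0.0:
            return math.inf  # exactly at a centre: infinitely strong
        total += radius ** 2 / d2
    return total

def inside(point, balls, threshold=1.0):
    """The blob's surface is the isosurface where the field equals the threshold."""
    return field(point, balls) >= threshold

one_ball = [((0.0, 0.0, 0.0), 1.0)]
two_balls = one_ball + [((2.5, 0.0, 0.0), 1.0)]
gap = (1.25, 0.0, 0.0)
print(inside(gap, one_ball))   # False: 1.25 is beyond a lone ball's radius
print(inside(gap, two_balls))  # True: the fields add and the balls fuse
```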


CAD

Computer aided design: a fairly generic term for any computer process that is used in design, though it generally refers to the use of a program such as AutoCAD for making drawings.

CAAD

Computer aided architectural design. CAAD programs add features such as greater automation of laborious tasks like producing bills of quantities, and symbol libraries of conventional architectural objects (doors, walls, windows), which make the production of conventional architectural designs very quick.

CAM

Computer aided manufacture: any manufacturing procedure that is aided by a computer. This could range from very simple computer-controlled lathe paths to controlling a laser sintering station.


CATIA

A computer program produced by a French company called Dassault Systèmes. It was initially used to design fighter jets because of its advanced handling of curved surfaces. Its real power lies in its massive capacity for customisation. There are now plug-ins for virtually all industry sectors, allowing designers to sculpt organic forms for packaging design or exact fuselage shapes for fighter jets. They can then make virtual assemblies of components, analyse stresses, design fabrication methods, mould shapes and ribs, etc. Its possibilities are huge. The only thing holding it back is its prohibitive price: an average set-up cost for one workstation is at least £20 000.

CNC

Computer numerical control. Computer-controlled manufacturing machines are described as CNC machines because a set of numerical instructions describes the tool paths. Initially these were set out on punch cards, but these days they are all digital. For architectural applications the most useful machines are five-axis milling machines. These have three linear axes (two horizontal and one vertical) and two rotational axes. This allows the head to rotate to present different parts of the tool to the surface, which allows for complex geometry and faster runs. The job needs fewer clamping changes, and the tool head can be moved out of the way if necessary.
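CNC instructions are commonly written as G-code (the RS-274 / ISO 6983 family). In G-code, `G0` is a rapid positioning move and `G1` is a straight cutting move at the feed rate `F`. The sketch below generates a single three-axis rectangular pass. A five-axis machine adds two rotary axes, commonly labelled with the letters A, B or C, to the same kind of line. Dialects vary between controllers. The clearance height and function name are hypothetical, and the output is not meant for a real machine, which also needs units, tool and spindle setup.

```python
def rectangle_pass(x0, y0, width, height, depth, feed):
    """G-code-style lines for cutting one rectangular pass at a given depth.

    G0 = rapid move (not cutting), G1 = straight cutting move at feed rate F.
    Illustrative only: no units, tool, spindle or safety setup is included.
    """
    safe_z = 5.0  # hypothetical clearance height above the stock
    corners = [(x0 + width, y0), (x0 + width, y0 + height), (x0, y0 + height), (x0, y0)]
    lines = [f"G0 Z{safe_z:.3f}",              # lift clear before moving
             f"G0 X{x0:.3f} Y{y0:.3f}",        # rapid to the start corner
             f"G1 Z{-depth:.3f} F{feed:.0f}"]  # plunge into the material
    for x, y in corners:
        lines.append(f"G1 X{x:.3f} Y{y:.3f}")  # cut along each side
    lines.append(f"G0 Z{safe_z:.3f}")          # lift clear again
    return lines

for line in rectangle_pass(0, 0, 40, 20, depth=1.5, feed=300):
    print(line)
```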

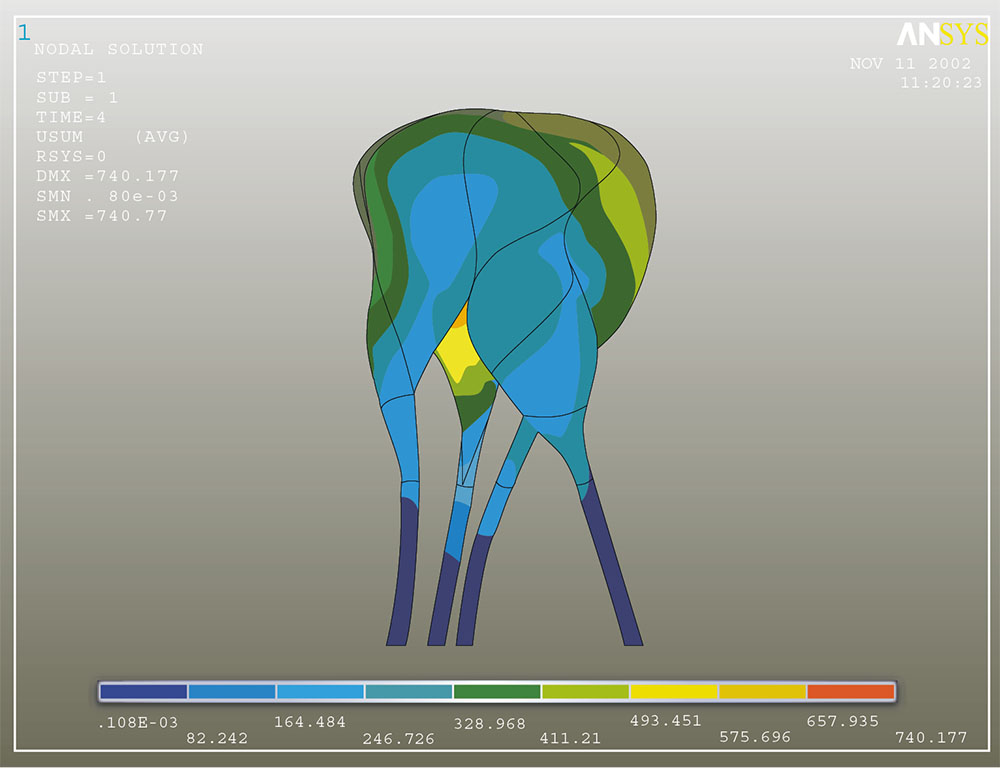

Finite element analysis (FEA)

This is a method of calculating the effects of a physical intervention (stress, heat, etc.) on an object. It was invented in 1943 and initially used for very limited analysis of deformations28. It essentially involves breaking the object into sections, the finite elements. A very large matrix is built up, and an even longer series of calculations is carried out on that matrix to establish the interaction between the elements. FEA was invented before digital computers were widely available, and it didn’t really take off until the advent of supercomputers, due to the huge calculations involved in producing results. The main FEA program these days is ANSYS. It was used to calculate the structure of the NOX D-Tower and apply forces such as wind loading to it19.
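The matrix idea can be shown at the smallest possible scale: a straight bar fixed at one end and pulled at the other, broken into a few elements. Each element behaves like a spring of stiffness EA/ℓ. The elements’ 2×2 stiffness matrices are added into one global matrix, the fixed end is removed, and the resulting simultaneous equations are solved for the displacement of every node. Programs such as ANSYS work in two or three dimensions with thousands of elements, but the assemble-and-solve pattern is broadly similar. The function names and the plain Gaussian elimination solver are illustrative choices.

```python
def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [b] for row, b in zip(matrix, rhs)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x

def bar_displacements(length, youngs_modulus, area, load, n_elements):
    """1D finite element model of a bar fixed at x = 0 and pulled at the far end."""
    k = youngs_modulus * area / (length / n_elements)  # stiffness of one element
    n_nodes = n_elements + 1
    K = [[0.0] * n_nodes for _ in range(n_nodes)]
    for e in range(n_elements):  # add each element's 2x2 stiffness matrix
        i, j = e, e + 1
        K[i][i] += k
        K[i][j] -= k
        K[j][i] -= k
        K[j][j] += k
    f = [0.0] * n_nodes
    f[-1] = load  # force applied at the free end
    # node 0 is fixed, so drop its row and column before solving
    reduced = [row[1:] for row in K[1:]]
    return [0.0] + solve(reduced, f[1:])

u = bar_displacements(length=2.0, youngs_modulus=200e9, area=0.01, load=1000.0, n_elements=4)
print(u[-1])  # tip displacement in metres; the hand formula F*L/(E*A) gives 1e-06
```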

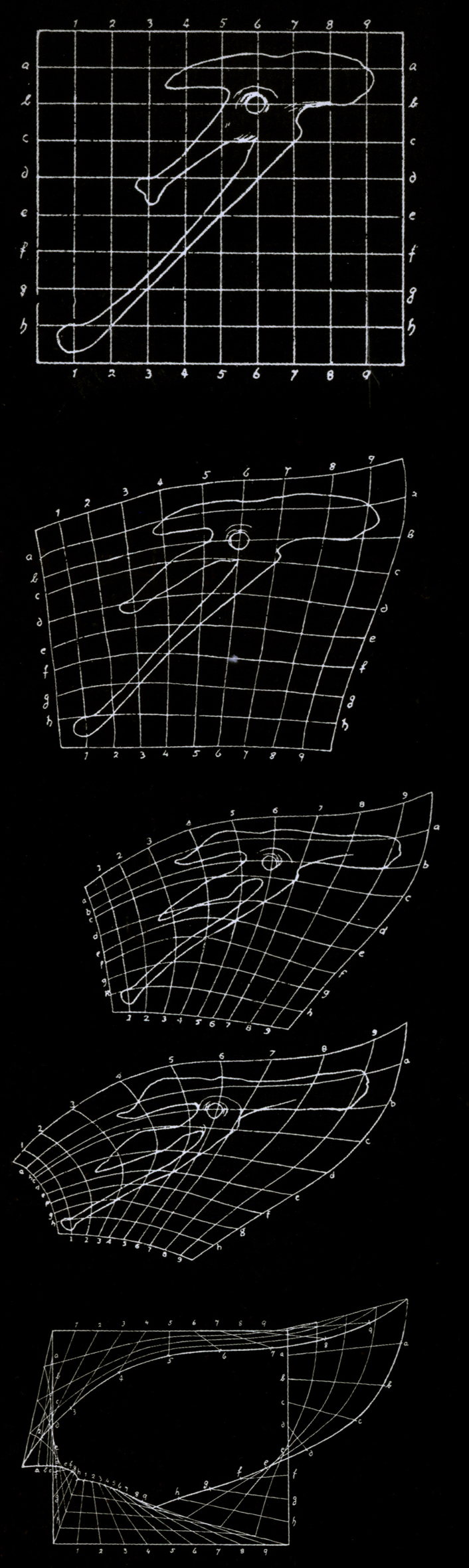

Morphing

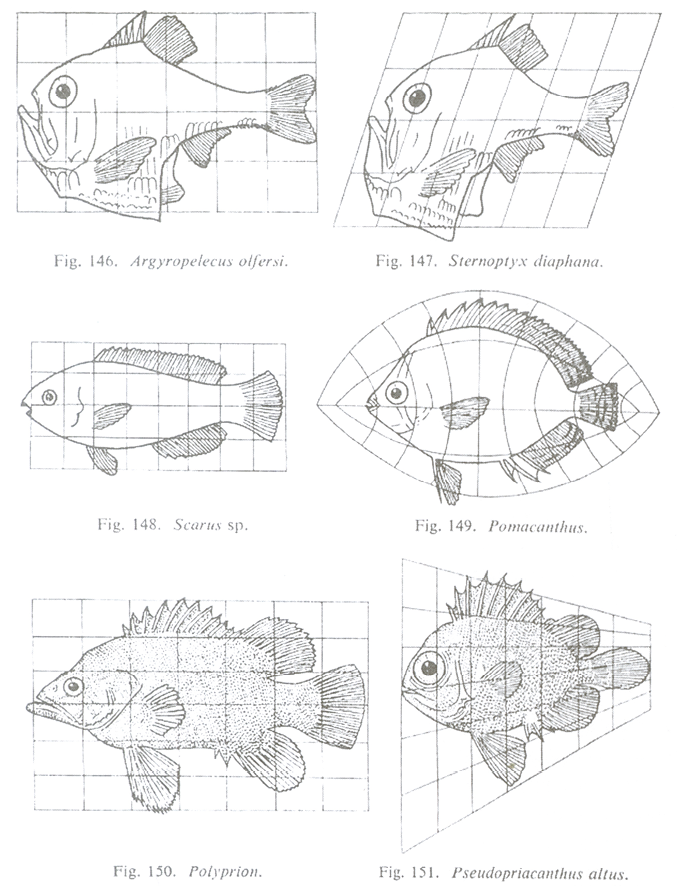

A procedure that first gained fame in the 1991 film Terminator 2: Judgment Day20, as the liquid-metal terminator turned into a pool of molten metal and then back into a humanoid robot. The idea was first described by D’Arcy Thompson in his 1917 book ‘On Growth and Form’. There he demonstrated the evolutionary lineage of skeletal parts by distorting a grid over them, and then continued the distortion to predict the next step.
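The geometric core of a morph is interpolation between corresponding points. Every point on the first shape is paired with a point on the second. Each in-between frame moves each point a fraction t of the way along the line joining its pair. Film morphing also warps and cross-fades the images themselves, which this sketch leaves out. The shapes and function name are illustrative.

```python
def morph(shape_a, shape_b, t):
    """Blend two shapes with matching points; t = 0 gives shape_a, t = 1 gives shape_b."""
    if len(shape_a) != len(shape_b):
        raise ValueError("shapes need the same number of corresponding points")
    return [tuple(a + (b - a) * t for a, b in zip(p, q))
            for p, q in zip(shape_a, shape_b)]

square = [(0, 0), (2, 0), (2, 2), (0, 2)]
diamond = [(1, -1), (3, 1), (1, 3), (-1, 1)]
frames = [morph(square, diamond, i / 4) for i in range(5)]
print(frames[2])  # halfway: [(0.5, -0.5), (2.5, 0.5), (1.5, 2.5), (-0.5, 1.5)]
```

Continuing t beyond 1 extrapolates the distortion, which is loosely analogous to Thompson predicting the next step.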

Parametric design

A method of design where the dimensions of an object are defined not as absolute numerical values, but in relation to other values; for instance, a rectangle where one side is x and the other is 2x. As parameters can be fixed or limited, parametric algorithms can be linked to configurations and used to mass-customise items such as running shoes29 or even houses30.
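The rectangle example can be written directly as a parametric object. A single driving parameter x sets the width, the length is always defined as 2x, and limits on x stand in for whatever a manufacturer can actually produce. Changing one number regenerates the whole design. The class name and the limit values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ParametricRectangle:
    """A rectangle driven by one parameter x: one side is x, the other is 2x."""
    x: float
    min_x: float = 0.5  # hypothetical limits, e.g. what can be manufactured
    max_x: float = 3.0

    def __post_init__(self):
        if not self.min_x <= self.x <= self.max_x:
            raise ValueError(f"x={self.x} is outside the allowed range")

    @property
    def width(self):
        return self.x

    @property
    def length(self):
        return 2 * self.x  # defined relative to x, not as a fixed number

    @property
    def area(self):
        return self.width * self.length

for x in (1.0, 1.5, 2.0):  # three "customised" variants from one definition
    r = ParametricRectangle(x)
    print(r.width, r.length, r.area)
```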

Artificial intelligence